Video games are one of the world’s newest art forms. Despite being among the most beautiful and technically challenging creative mediums, they still lack the kind of prestige bestowed upon other forms of art such as films or novels.

This is a shame, because what is required to produce a virtual world is nothing short of miraculous. Video game technologies draw on knowledge from a myriad of disciplines, including mathematics, physics, and, of course, an intimate familiarity with computer hardware. That’s not even to mention the layers of artistry involved in game visuals, narrative architecture, and gameplay design.

More so than any other medium, video games are capable of expanding the human experience. They can deliver perspectives and sensations achievable only within a game’s virtual environment. To do so, game developers not only leverage the bleeding edge of computing but in many cases push that edge out themselves.

The stories of the gaming and computing industries are deeply intertwined and symbiotic, and it’s only a matter of time before gaming’s impact on digital technologies is fully recognized. It is our hope that this essay will play a small part in highlighting some of the terrific achievements, across both hardware and software, that were brought about by the gaming industry.

The Latest and Greatest in Gaming

It’s often underappreciated just how big the gaming industry truly is. Within the United States, video games generate nearly $100 billion in annual revenue, while the global industry rakes in more than twice as much. That makes the global gaming industry five times bigger than Hollywood and bigger than the film and music industries combined.

Behind the production of many games are teams of dozens of engineers and artists who often spend years laboring over a game before it is released. Games produced by top studios, referred to as “triple-A studios,” often have production budgets in the tens of millions of dollars, and some of the most anticipated upcoming games could cost orders of magnitude more. Recent rumors have circulated that Call of Duty: Modern Warfare 3 could rack up a $1 billion production cost.

All this investment and talent has produced a truly remarkable technology: engines capable of running real-time simulations of the world, which have found tremendous relevance far beyond games. Everyone from commercial pilots, who rely on flight simulators for training, to budding surgeons and even architects is increasingly finding simulations enabled by game engines useful to their crafts. The 2023 film Gran Turismo, which dramatized the true story of a gamer who transferred his skills at racing games directly into real-world racing, is a case in point.

The ability to transfer skills gained in the virtual realm to the physical realm will likely accelerate as the realism achieved by top-of-the-line game engines improves with time. In 2022, Unreal Engine 5 stunned the world with its ability to render photorealistic graphics in real time.

Source: Unreal Engine

As graphics hardware continues to improve, the prospects for virtual simulators like these seem endless. However, to truly appreciate what will be required to continue improving the quality of such simulators, let’s take a look at the scope of the technical work required to produce a game engine.

What’s Inside a Game Engine

The majority of two- or three-dimensional games are real-time interactive simulations. This means they take place in a virtual world whose state must be generated and updated in real time.

For games striving for a high degree of realism, like modern first-person shooters, there are many considerations that go into making the world appear true-to-life. The game must have a model of real-world physics, and remain vigilant to ensure that behaviors conform to physical laws. It must also have a model of how light works, tracking the location of different light sources and how they affect objects perceived by the player.

Not only must the game encode all this information in a mathematical model of the world, it needs to update that model at speeds mimicking reality. For most games, this means the screen must be updated at least 24 times per second to give the illusion of motion. In practice, most games target 30 or 60 frames per second, and most screens refresh at 60 Hz (60 times per second) or more, so state updates need to happen even faster than that minimum. At 60 frames per second, the engine has only about 16.7 milliseconds to produce each frame.
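The time budget for each frame follows directly from the frame rate: one second divided by the number of frames. Here is a minimal Python sketch. The function name `frame_budget_ms` is our own, chosen for illustration.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Milliseconds available to update and render a single frame."""
    return 1000.0 / frames_per_second


# Common targets: film-like motion, console-style 30 fps, 60 fps, high-refresh displays.
for fps in (24, 30, 60, 120):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

Doubling the frame rate halves the time the engine has to do all of its work for a frame. This is why high frame rates are so demanding.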

In charge of managing all of this is the game engine. Essentially the operating system for the game world, the game engine runs in a continuous loop, updating and re-rendering the game world to determine its state at each point in time.
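One common way to structure this loop is a "fixed timestep" loop, offered here as one design among several. The world state always advances in equal increments, and rendering happens once per pass through the loop.

The sketch below is a minimal, hypothetical Python version. `World`, `update`, `render`, and the 1/60-second step are illustrative assumptions, not any engine's API. Instead of reading a real clock, the loop consumes a list of pretend frame durations so it runs the same way everywhere.

```python
from dataclasses import dataclass, field

FIXED_DT = 1.0 / 60.0  # assumed simulation step: 60 updates per simulated second


@dataclass
class World:
    """A toy world with one object moving at constant speed (hypothetical)."""
    time: float = 0.0          # simulated seconds elapsed
    x: float = 0.0             # position of the single moving object
    velocity: float = 2.0      # units per second
    frames_rendered: list[float] = field(default_factory=list)

    def update(self, dt: float) -> None:
        """Advance the world state by one fixed timestep."""
        self.x += self.velocity * dt
        self.time += dt

    def render(self) -> None:
        """Stand-in for drawing: record the state that would be drawn."""
        self.frames_rendered.append(self.x)


def run_game_loop(world: World, frame_durations: list[float]) -> None:
    """Fixed-timestep loop; each entry is how long one pass of the loop took."""
    accumulator = 0.0
    for elapsed in frame_durations:       # a real engine would read a clock here
        accumulator += elapsed
        # Catch the simulation up in fixed steps, however long the frame took.
        while accumulator >= FIXED_DT:
            world.update(FIXED_DT)
            accumulator -= FIXED_DT
        world.render()                    # draw once per pass through the loop


world = World()
run_game_loop(world, [1 / 60] * 60)       # one second of steady 60 fps frames
print(f"simulated {world.time:.3f} s, x = {world.x:.3f}, frames drawn = {len(world.frames_rendered)}")
```

The fixed step keeps the simulation's behavior independent of how quickly a particular machine draws frames. A slow frame simply triggers several updates before the next draw.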

In the early days of gaming, when many video games were 8-bit platformers like Mario Bros., the logic for all of these considerations could be coded from scratch for each new game. The original Mario Bros. had something like 22,000 lines of code. Even in the late 1990s, game developer Chris Sawyer spent two years coding RollerCoaster Tycoon (released in 1999) almost entirely in assembly language.

However, as hardware improved and visuals became more complex, coding all the physics and rendering logic from scratch became a massive undertaking. Rather than reinventing the wheel each time, game developers began producing game engines: programs with much of the required game logic already built in, on top of which game designers could build unique games.

Today, the biggest state-of-the-art game engines comprise millions of lines of code. Unreal Engine 5, which takes up around 115 GB, is estimated to comprise up to 16 million lines, and that’s just the engine that runs the game logic. On top of this, games like Mass Effect, BioShock, Gears of War, and Fortnite, all of which were built on versions of Unreal Engine, comprise hundreds of thousands or millions of lines more.

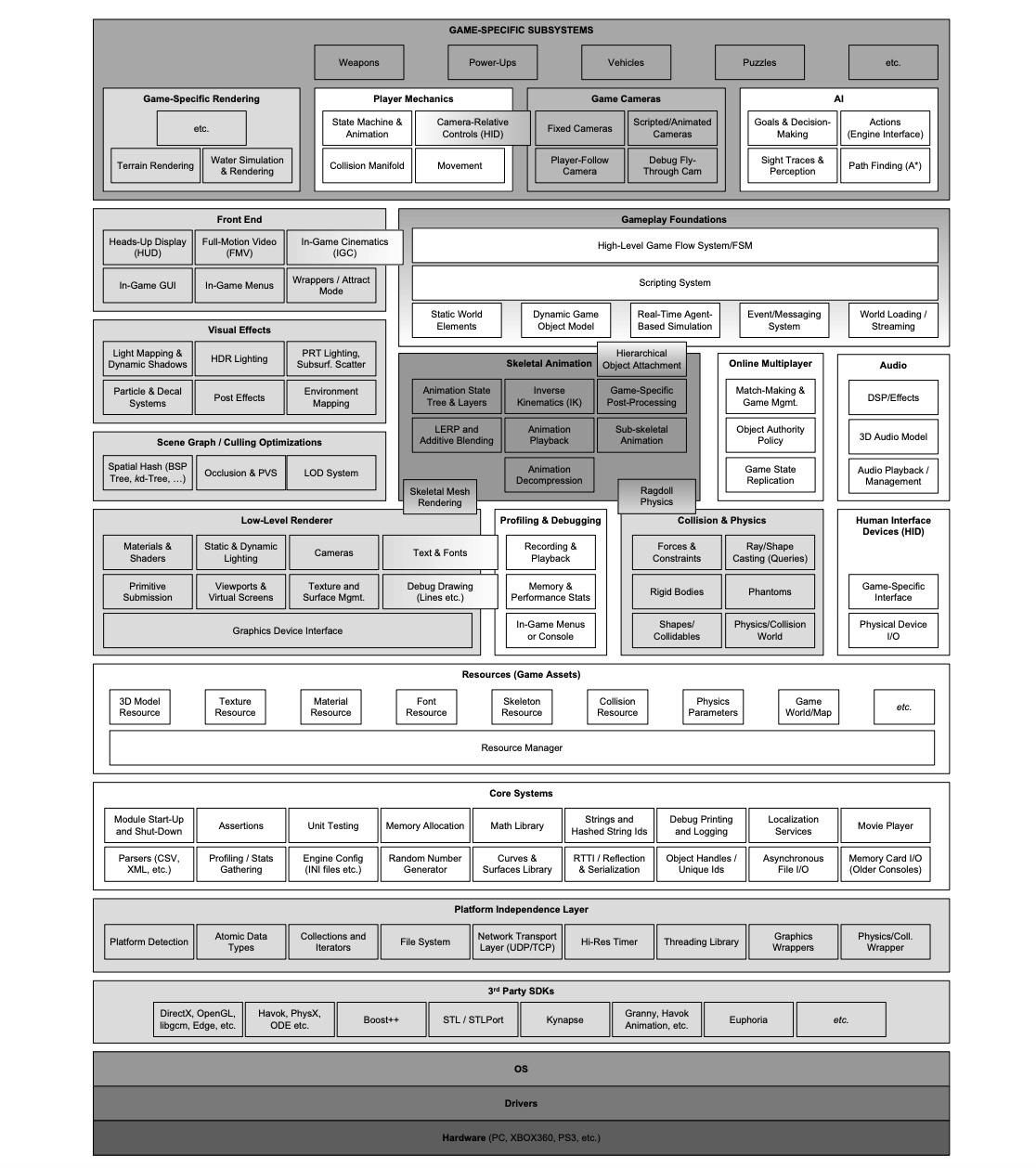

Clearly, game engines are massive, with many moving parts. However, a few key subsystems account for the bulk of the work: the gameplay foundations layer, the physics and collision system, and the rendering engine.

Source: Run time game engine architecture, Jason Gregory

Gameplay Foundations

The gameplay foundations layer is in charge of executing all the game-specific logic. It dictates what actually happens in the game: the flow of events, the game-specific rules, and so on.

Essentially, you can think of this layer as the UX of the game. It keeps track of all of the game objects, from background scenery and geometry to dynamic objects like chairs, cans, roadside rocks, playable characters, and non-playable characters. It also handles the event system, which determines how and when these different objects interact within the game.
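To make game objects and an event system concrete, here is a minimal, hypothetical Python sketch. Rather than every object checking on every other object, each object subscribes to the events it cares about, and the event system delivers each event to its subscribers. The class names (`EventBus`, `GameObject`) and the `"explosion"` event are invented for illustration; real engines use far more elaborate object models.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Delivers each published event to every handler subscribed to its name."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_name: str, handler: Handler) -> None:
        self._handlers[event_name].append(handler)

    def publish(self, event_name: str, payload: dict) -> None:
        for handler in self._handlers[event_name]:
            handler(payload)


class GameObject:
    """A minimal game object: a name, a 2D position, and some health."""

    def __init__(self, name: str, position: tuple[float, float], health: int = 100) -> None:
        self.name = name
        self.position = position
        self.health = health

    def on_explosion(self, event: dict) -> None:
        """React to an explosion: take damage if inside the blast radius."""
        dx = self.position[0] - event["center"][0]
        dy = self.position[1] - event["center"][1]
        if (dx * dx + dy * dy) ** 0.5 <= event["radius"]:
            self.health -= event["damage"]


bus = EventBus()
objects = [
    GameObject("player", (0.0, 0.0)),
    GameObject("crate", (3.0, 4.0)),   # exactly 5 units from the blast center
    GameObject("npc", (10.0, 0.0)),    # well outside the blast
]
for obj in objects:
    bus.subscribe("explosion", obj.on_explosion)

bus.publish("explosion", {"center": (0.0, 0.0), "radius": 5.0, "damage": 40})
print({obj.name: obj.health for obj in objects})
```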

By the 2010s, the average video game had something like 10,000 active gameplay objects to keep track of and update. Each object that is updated usually touches 5-10 other objects, which must then be updated as well. That adds up to a lot of computation, but it is actually only a tiny fraction of the work done by other parts of the game engine, like the physics simulator.

Physics System

The physics system is one of the core parts of the game engine. It encodes many of the physical laws that govern the real world, everything from gravitational acceleration (9.8 m/s²) to Newton’s laws of motion. The physics system updates the positions of objects in the world based on how quickly they are moving, which means it must keep track of the velocities of different objects and continuously update their locations.
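Continuously updating velocities and positions is usually done by numerical integration, one small timestep at a time. The sketch below uses semi-implicit Euler integration to drop an object from 100 m under gravity. This is one common choice among several; the text does not say which scheme any particular engine uses. The `Body` class and `step` function are hypothetical.

```python
from dataclasses import dataclass

GRAVITY = -9.8  # m/s^2, acting along the y axis


@dataclass
class Body:
    y: float    # height in meters
    vy: float   # vertical velocity in m/s


def step(body: Body, dt: float) -> None:
    """Semi-implicit Euler: update velocity first, then position using the new velocity."""
    body.vy += GRAVITY * dt
    body.y += body.vy * dt


ball = Body(y=100.0, vy=0.0)
dt = 1.0 / 60.0
for _ in range(60):  # one simulated second at 60 steps per second
    step(ball, dt)
print(f"after 1 s: y = {ball.y:.2f} m, vy = {ball.vy:.2f} m/s")
```

After one simulated second, the ball has fallen about 4.98 m, close to the exact value of ½·g·t² = 4.9 m. The small gap is the error introduced by taking finite steps, and it shrinks as the step size shrinks.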

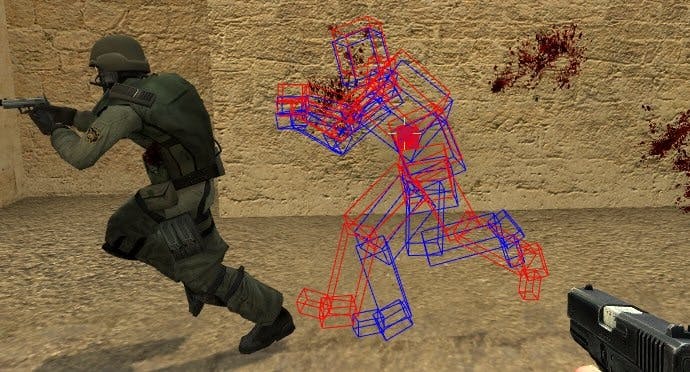

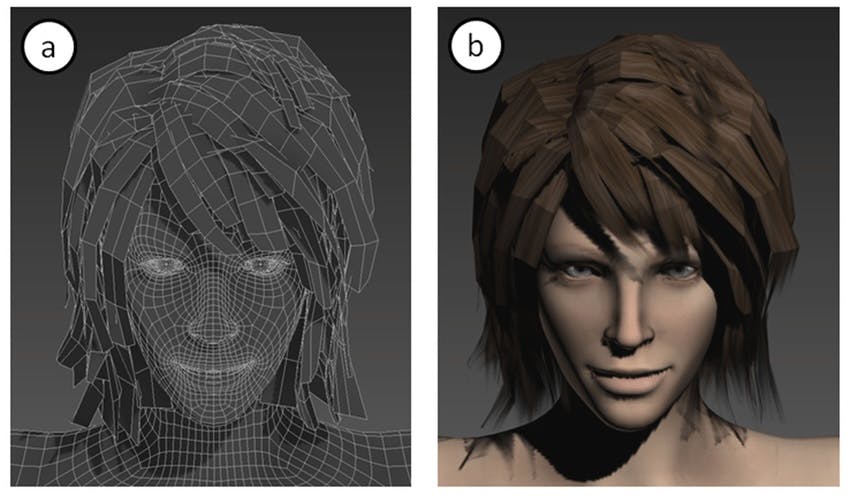

More importantly, and perhaps most critically to gameplay, the physics simulator handles what happens when two objects collide. Determining when objects are colliding in the first place is a challenge in itself. Most game object models, like humanoid players, are complex figures made up of many tiny surfaces, and it would be extremely computationally intensive to check, at every moment, whether any of those surfaces intersect another object like a bullet or a wall. As a result, most games simplify object models into more basic shapes, like boxes, known as hitboxes. This limits the number of surfaces the collision system needs to keep track of, but calculating collisions among all the possible surfaces in a single frame of a game is still a lot of work.

Source: Hitboxes, Technotification
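Boxes help because overlap between two axis-aligned bounding boxes (AABBs) can be decided with just six comparisons. An AABB is a box whose edges line up with the world's x, y, and z axes. Here is a minimal sketch with a hypothetical `AABB` class and made-up box sizes. Real engines also use spheres, capsules, and rotated boxes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AABB:
    min_x: float
    min_y: float
    min_z: float
    max_x: float
    max_y: float
    max_z: float

    def intersects(self, other: "AABB") -> bool:
        """Two boxes overlap only if their extents overlap on every axis."""
        return (
            self.min_x <= other.max_x and self.max_x >= other.min_x
            and self.min_y <= other.max_y and self.max_y >= other.min_y
            and self.min_z <= other.max_z and self.max_z >= other.min_z
        )


player_hitbox = AABB(0.0, 0.0, 0.0, 0.6, 1.8, 0.6)   # roughly human-sized box
bullet = AABB(0.5, 1.5, 0.2, 0.52, 1.52, 0.22)        # tiny box inside the player's
wall = AABB(2.0, 0.0, -5.0, 2.2, 3.0, 5.0)            # off to the side

print(player_hitbox.intersects(bullet))  # True
print(player_hitbox.intersects(wall))    # False
```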

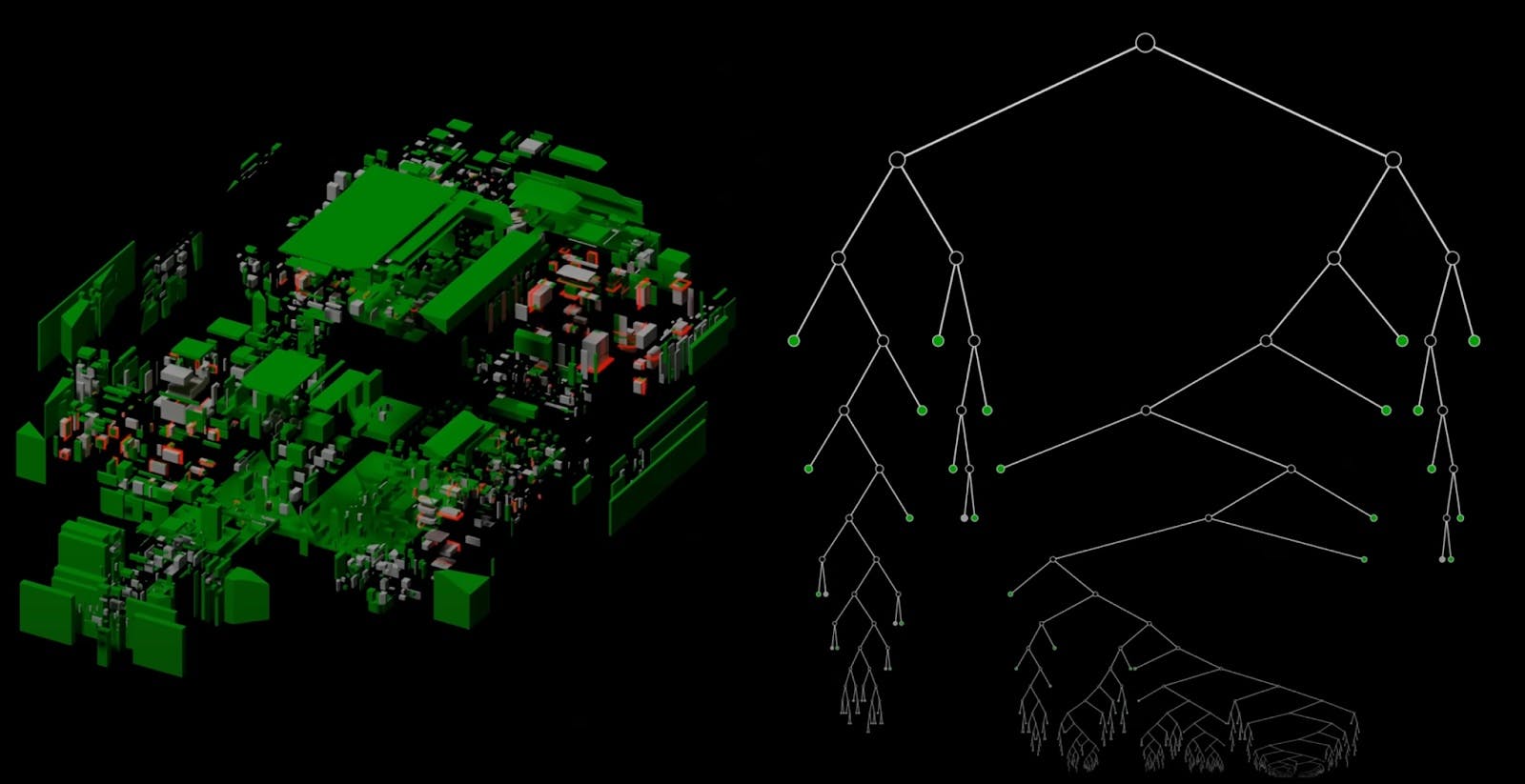

Figuring out how to do these types of calculations efficiently, especially decades ago when hardware was limited in memory and compute, gave rise to some of the cleverest optimization schemes in computer history. One famous example is the binary space partitioning (BSP) tree, which the legendary programmer John Carmack put to work in building the game Quake, released in 1996.

Carmack figured that rather than checking whether every surface was intersecting with every other one, he could split the game space into sections stored in a binary tree. Then, the engine would only need to check the surfaces closest to active gameplay. Each node of the tree divides space with a plane, so finding the surfaces near an object means walking down from the root and asking, at each node, which side of the plane the object is on. The illustration below shows how Quake represented its first level as a binary space partitioning tree.

Source: Matt’s Ramblings
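A two-dimensional toy version shows why the tree pays off:

- Each internal node stores a splitting line.
- Each leaf stores the handful of wall surfaces in its region.
- Locating an object means walking down from the root and picking a side of each line. In a balanced tree with N leaves, that takes only about log2(N) comparisons.

The hand-built tree and wall names below are hypothetical. Quake's tools built much larger 3D trees automatically from the level geometry.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    walls: list[str]          # the few surfaces worth collision-testing in this region


@dataclass
class Node:
    # Splitting line a*x + b*y = c; points where a*x + b*y >= c are "in front".
    a: float
    b: float
    c: float
    front: Union["Node", Leaf]
    back: Union["Node", Leaf]


def locate(tree: Union[Node, Leaf], x: float, y: float) -> Leaf:
    """Walk down from the root, choosing a side of each splitting line, until a leaf."""
    while isinstance(tree, Node):
        tree = tree.front if tree.a * x + tree.b * y >= tree.c else tree.back
    return tree


# A hypothetical 20 x 20 level: split at x = 10, then each half split at y = 10.
level = Node(1, 0, 10,
             front=Node(0, 1, 10, front=Leaf(["NE wall"]), back=Leaf(["SE wall", "pillar"])),
             back=Node(0, 1, 10, front=Leaf(["NW wall", "door"]), back=Leaf(["SW wall"])))

print(locate(level, 15, 3).walls)  # ['SE wall', 'pillar']
```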

Even so, collision detection is only a fraction of the considerations that go into the physics engine. Once a collision is detected, the physics system needs to determine how the objects respond: whether they fly off, break, or bounce, depending on properties like their mass, friction, and bounciness. The physics simulator models the mechanics of an entire virtual world, but even it is not the main workhorse of the game engine.
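A common simplified way to model a bounce is with a coefficient of restitution: the fraction of an object's approaching speed that it keeps after impact. The sketch below applies it, together with a deliberately crude friction factor, to an object hitting a flat floor. The function and parameter names are illustrative assumptions, not any engine's API. Real engines typically resolve collisions with impulses computed from mass and contact geometry.

```python
def bounce_off_floor(vx: float, vy: float, restitution: float, friction: float) -> tuple[float, float]:
    """Return the velocity after hitting a horizontal floor whose normal points along +y.

    restitution: fraction of the speed into the floor that is kept (0 = no bounce, 1 = perfect).
    friction: fraction of the sliding (horizontal) speed lost on contact, a crude model.
    """
    if vy >= 0:                        # moving away from the floor: nothing to resolve
        return vx, vy
    new_vy = -vy * restitution         # reverse and damp the component into the floor
    new_vx = vx * (1.0 - friction)     # slow the sliding component
    return new_vx, new_vy


rubber_ball = bounce_off_floor(vx=3.0, vy=-10.0, restitution=0.8, friction=0.1)
clay_ball = bounce_off_floor(vx=3.0, vy=-10.0, restitution=0.05, friction=0.6)
print(rubber_ball, clay_ball)
```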

Rendering Engines

By far the most computationally intensive subsystem of the game engine is the rendering engine. Simulating how objects look, especially visually complicated three-dimensional ones, is very hard.

The human eye sees through a remarkable process: photoreceptor cells at the back of our eyes, the rods and cones, detect the billions upon billions of photons bouncing off our surroundings and into our retinas. It’s these countless rays of visible light, reflected and scattered by the world around us, that allow us to distinguish light from shadow and make out objects in three dimensions. This process is so computationally intense, in fact, that studies show roughly half of the brain’s cortex is engaged in visual processing.

So, how do you mimic this process on a computer, especially one with only a few megabytes of RAM, as most computers had in the early 1990s?

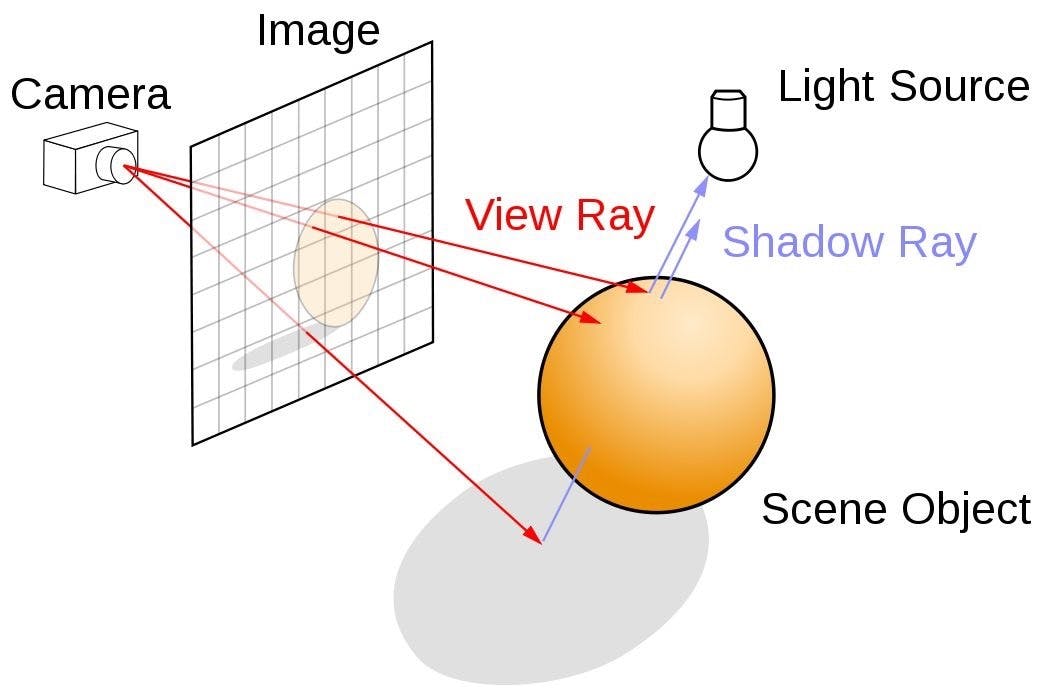

It would be very computationally expensive to copy exactly what light does. That would involve casting a number of light rays through each screen pixel into the virtual 3D scene and noting how each one bounces from one surface to the next, to produce the full range of lighting effects: highlights, shadows, indirect light, and diffuse light.

Source: NVIDIA
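The "rays per pixel" idea can be sketched in a few lines: for each pixel, cast a ray from the camera through that pixel and test what it hits. This toy Python version uses a single sphere and one ray per pixel, with no bounces, so it is only the first step of real ray tracing. The scene values are made up for illustration.

```python
import math


def ray_hits_sphere(origin, direction, center, radius):
    """Return the distance t to the nearest hit along the ray, or None if it misses.

    Solves |origin + t*direction - center|^2 = radius^2, a quadratic in t.
    """
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    dx, dy, dz = direction
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - 4 * a * c
    if disc < 0:
        return None                          # the ray misses the sphere entirely
    t = (-b - math.sqrt(disc)) / (2 * a)     # nearer of the two intersections
    return t if t > 0 else None


def render(width=32, height=16):
    camera = (0.0, 0.0, 0.0)
    sphere_center, sphere_radius = (0.0, 0.0, -3.0), 1.0
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel center to a point on an image plane at z = -1.
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            hit = ray_hits_sphere(camera, (x, y, -1.0), sphere_center, sphere_radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


print("\n".join(render()))
```

A full ray tracer would, at each hit, spawn further rays toward lights and reflective surfaces. That is where the cost multiplies.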

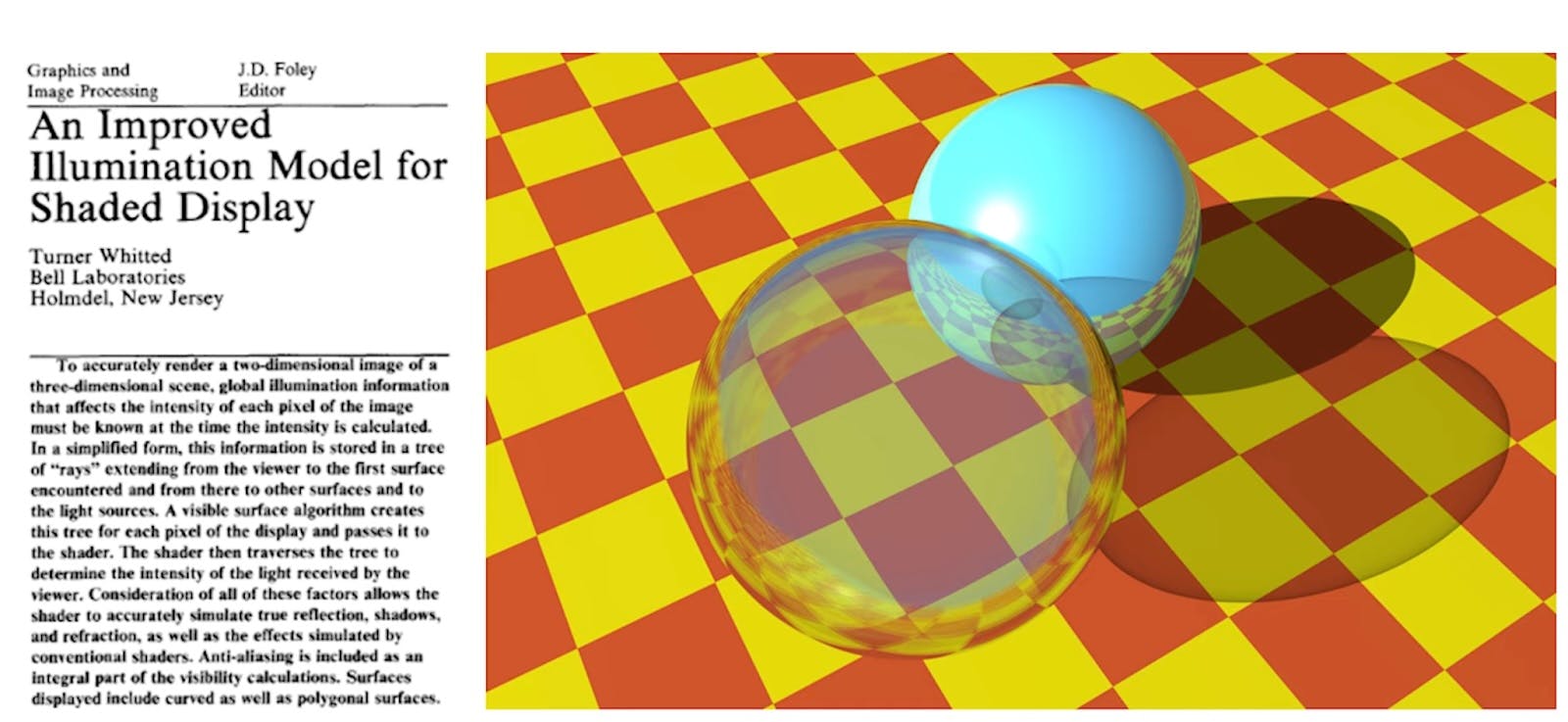

Even so, the algorithms for doing this and producing photorealistic computer graphics were drawn up in the 1980s by graphics pioneers like J. Turner Whitted. He managed to produce stunning images for his time by having the computer slowly trace rays one by one to determine exactly how much each screen pixel should be illuminated. In 1980, this method took Whitted 74 minutes to render the image below.

Source: NVIDIA

Though it confirmed that the theory for photorealistic lighting was there, in practice, the algorithm was nowhere near feasible for games that required real-time rendering.

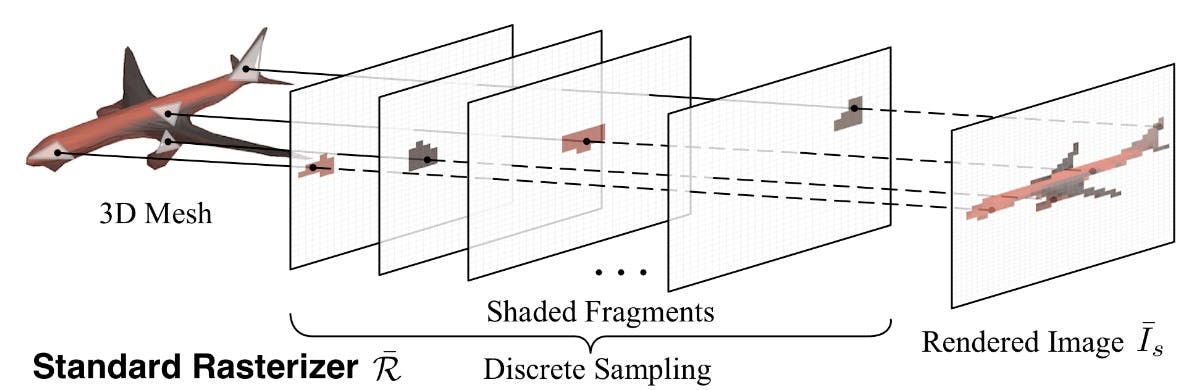

Instead, the graphics industry looked for ways to simplify. Rather than pursuing ray tracing, it developed a method called rasterization. Rasterization was an exercise in perspective projection. Three-dimensional objects in a perspective field were given a measure of depth relative to a virtual camera lens. Then, the rasterization algorithm would determine which pixels to shade in to indicate the presence of a plane and which pixels to leave blank.

Source: USC
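At its core, rasterization asks, for each pixel, whether that pixel's center falls inside a projected triangle. One standard way to answer this uses "edge functions," which measure which side of each triangle edge a point lies on.

The sketch below is a minimal, hypothetical Python version with two steps:

1. It projects 3D vertices with a simple perspective divide (x/-z, y/-z), assuming a camera at the origin looking down the negative z axis.
2. It fills pixels in a tiny ASCII grid.

Real rasterizers add depth testing, clipping, and much more.

```python
def project(vertex, width, height):
    """Perspective-project a 3D point (camera at origin, looking down -z) to pixel coordinates."""
    x, y, z = vertex
    ndc_x, ndc_y = x / -z, y / -z                  # farther points shrink toward the center
    return ((ndc_x + 1) * 0.5 * width, (1 - ndc_y) * 0.5 * height)


def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p); its sign tells which side of a->b p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangle_3d, width=24, height=12):
    v0, v1, v2 = (project(v, width, height) for v in triangle_3d)
    area = edge(v0, v1, v2)
    grid = []
    for py in range(height):
        row = ""
        for px in range(width):
            p = (px + 0.5, py + 0.5)               # sample at the pixel center
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside if all three edge functions agree with the triangle's orientation.
            if area > 0:
                inside = w0 >= 0 and w1 >= 0 and w2 >= 0
            else:
                inside = w0 <= 0 and w1 <= 0 and w2 <= 0
            row += "#" if inside else "."
        grid.append(row)
    return grid


triangle = [(-1.0, -1.0, -2.0), (1.0, -1.0, -2.0), (0.0, 1.0, -2.0)]
print("\n".join(rasterize(triangle)))
```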

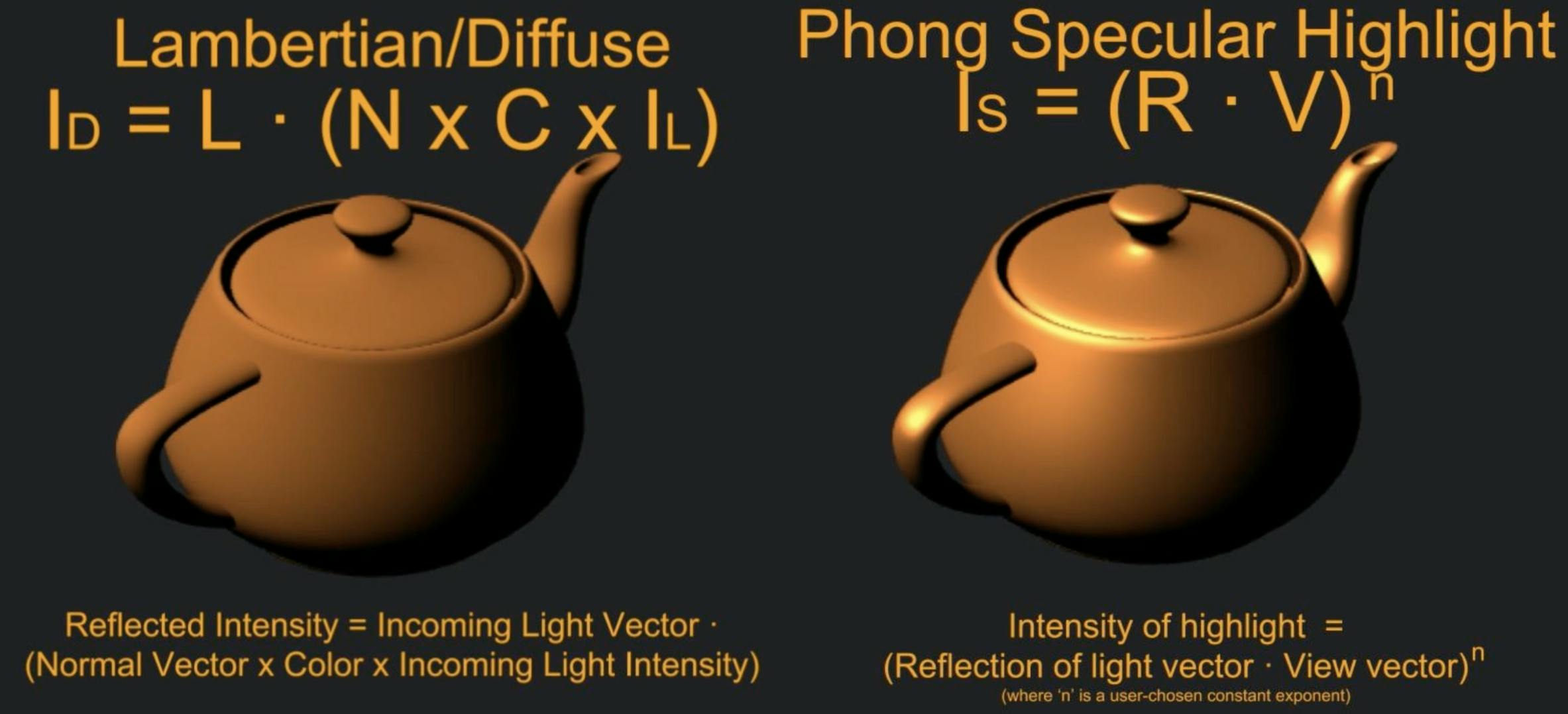

Rasterization was capable of drawing the basic shapes of objects relative to a particular point of view. However, to account for light and shadow, which further convey the three-dimensional nature of objects, a series of other clever algorithms were developed to approximate how light scatters off objects. Techniques like Lambertian shading and Phong shading estimate where highlights and shadows should fall based on the orientation of an object’s surfaces and the direction of the light source. These algorithms don’t calculate individual rays; instead, they output an approximation of how a lit object should look on average.

Source: HappieCat
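Both approximations reduce to a few vector operations per surface point:

- **Lambertian diffuse term:** scales brightness by the cosine of the angle between the surface normal and the direction to the light.
- **Phong specular term:** adds a highlight that grows as the mirror reflection of the light direction lines up with the direction to the viewer. This comes from the Phong reflection model.

Here is a minimal Python sketch of the two terms. The function names and the shininess exponent of 32 are illustrative assumptions.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(normal, to_light, to_viewer, shininess=32):
    """Return (diffuse, specular) intensities for one surface point.

    Diffuse (Lambert): cosine of the angle between the normal and the light direction.
    Specular (Phong): reflect the light direction about the normal, then measure how
    closely that reflection points at the viewer, sharpened by the shininess exponent.
    """
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = dot(n, l)
    diffuse = max(0.0, n_dot_l)
    if n_dot_l <= 0:
        return diffuse, 0.0                    # surface faces away from the light
    reflect = tuple(2 * n_dot_l * nc - lc for nc, lc in zip(n, l))
    specular = max(0.0, dot(reflect, v)) ** shininess
    return diffuse, specular


# Light and viewer straight above a flat floor: full diffuse and a full highlight.
print(shade((0, 1, 0), (0, 1, 0), (0, 1, 0)))
# Light arriving 60 degrees from the normal: diffuse falls to cos(60°) = 0.5,
# and the highlight all but vanishes for a viewer looking straight down.
print(shade((0, 1, 0), (math.sin(math.radians(60)), math.cos(math.radians(60)), 0), (0, 1, 0)))
```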

Even approximating algorithms like these were still too computationally complex to run in real time in the 1990s. However, those with more time on their hands, like animated movie studios, could use them to render films. The first fully computer-animated feature film, Toy Story, was released in 1995. It was rendered using Pixar’s proprietary rendering engine, RenderMan, which at the time relied on rasterization techniques rather than ray tracing. Even so, the film’s 114,240 frames took 800,000 machine hours to render. Put another way, 117 computers running 24 hours per day rendered less than 30 seconds of the film per day.
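A quick back-of-the-envelope check shows that these figures are consistent with one another. It assumes that all 117 machines ran around the clock and that the film plays at the standard 24 frames per second.

```python
FRAMES = 114_240           # frames in the film (figure from the text)
MACHINE_HOURS = 800_000    # total render time (figure from the text)
MACHINES = 117             # computers in the render farm (figure from the text)
FPS = 24                   # standard film frame rate (assumed)

hours_per_frame = MACHINE_HOURS / FRAMES              # average machine-hours per frame
frames_per_day = MACHINES * 24 / hours_per_frame      # farm output per 24-hour day
seconds_of_film_per_day = frames_per_day / FPS

print(f"{hours_per_frame:.1f} machine-hours per frame")
print(f"{frames_per_day:.0f} frames per day")
print(f"{seconds_of_film_per_day:.1f} seconds of film per day")
```

The result is roughly 7 machine-hours per frame and about 17 seconds of finished film per day. This fits the "less than 30 seconds" figure.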

All of this changed for the better with the introduction of graphics cards. Though graphics-specific chips had existed previously, NVIDIA popularized the term “graphics processing unit,” or GPU, when it released its GeForce 256 chip in 1999.

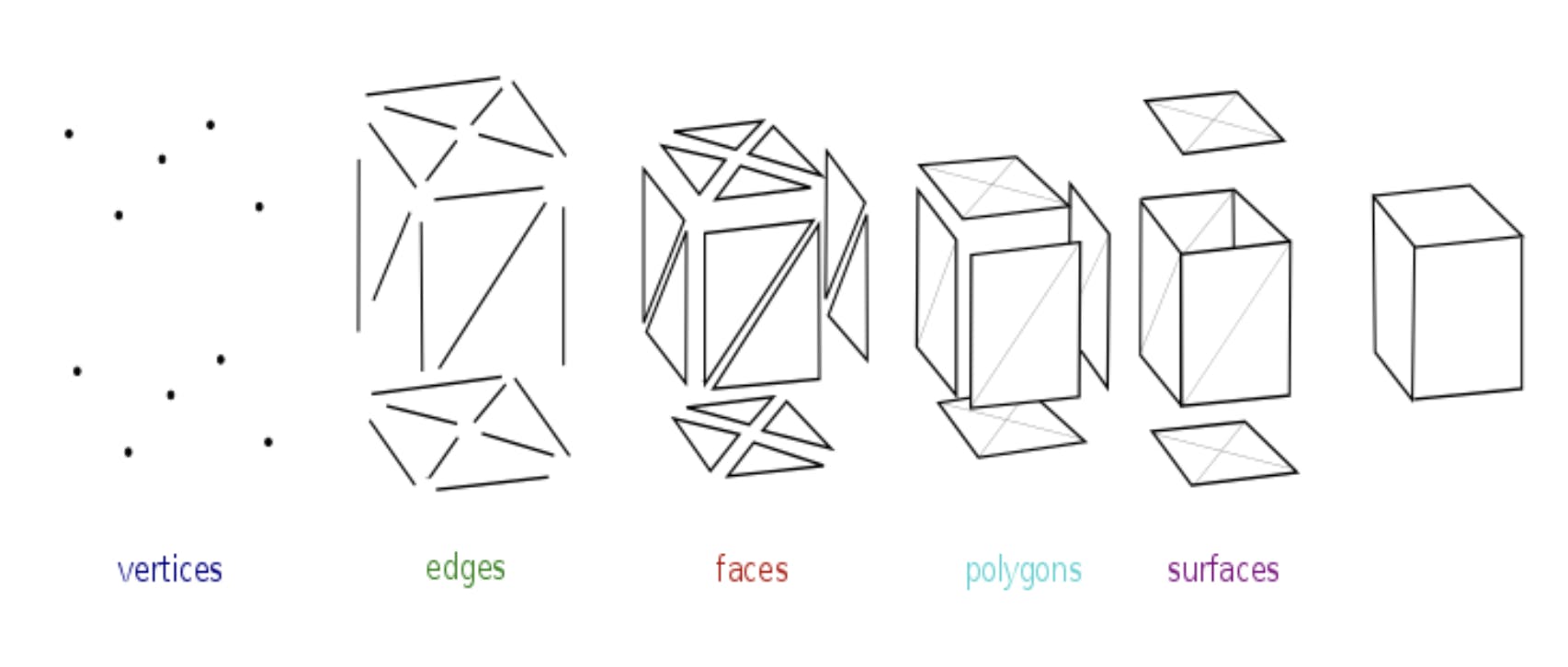

The computations required for rendering are largely a series of matrix multiplications. In a computer, 3D objects are modeled as meshes of triangles. The vertices of these triangles are encoded in a matrix, which describes the shape of the object. Placing and orienting the object in the game world requires multiplying that vertex matrix by a transformation matrix that describes its position and rotation in the virtual game space.

Source: Polygon mesh

From there, perspective projection, which turns the 3D models into a two-dimensional view, requires another series of matrix multiplications, and shading algorithms account for even more matrix multiplication on top of that.

Source: Research Gate
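The chain of matrix multiplications described above can be sketched in plain Python. The sketch assumes several common conventions that the text does not specify:

- Vertices are stored as the columns of a matrix in homogeneous coordinates (x, y, z, 1).
- The projection uses an OpenGL-style perspective matrix, with the camera looking down the negative z axis.
- The pipeline ends with a "perspective divide" by w.

The function names are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def rotation_y(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def perspective(fov_y, aspect, near, far):
    """OpenGL-style projection matrix; the camera looks down the -z axis."""
    f = 1.0 / math.tan(fov_y / 2)
    return [[f / aspect, 0, 0, 0],
            [0, f, 0, 0],
            [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
            [0, 0, -1, 0]]


# One triangle: vertices (0,0,0), (1,0,0), (0,1,0) as columns, with a row of w = 1.
triangle = [[0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0],
            [0.0, 0.0, 0.0],
            [1.0, 1.0, 1.0]]

# Model matrix: rotate 30 degrees about y, then move 5 units in front of the camera.
model = matmul(translation(0, 0, -5), rotation_y(math.radians(30)))
clip = matmul(perspective(math.pi / 2, 1.0, 0.1, 100.0), matmul(model, triangle))

# Perspective divide: screen position (x/w, y/w) for each vertex column.
screen = [(clip[0][j] / clip[3][j], clip[1][j] / clip[3][j]) for j in range(3)]
print(screen)
```

The resulting coordinates range from -1 to 1 across the screen. A rasterizer would then map them to pixels.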

NVIDIA’s GPUs were specifically designed to do exactly these calculations extremely efficiently. Luckily for NVIDIA, it turned out that creating chips optimized to perform matrix multiplication would one day prove incredibly useful for the field of artificial intelligence. It was a happy coincidence.

The proliferation and increasing power of GPUs created a boom in computer graphics. By 2006, Unreal Engine 3 was capable of rendering up to 5,000 visible objects in a frame, which required around 500 GFLOPS, or 500 billion floating point operations per second.

From that point on, the algorithms and math needed to produce true-to-life photorealistic graphics were established. The only thing the graphics and gaming industry needed was more compute.

In 2018, NVIDIA changed the computer graphics industry forever when it released its RTX cards, GPUs with dedicated hardware for ray tracing. GPU hardware had become so powerful that many of the earlier algorithms invented to cut corners when simulating the effects of light were no longer strictly necessary. Chips could now illuminate a scene by literally tracing the paths of up to four light rays per pixel in real time.

Today, this allows modern computer graphics to achieve stunning levels of photorealism in real time. Renders produced using Unreal Engine 5 can depict light glimmering through complex scenes of dense foliage in high definition, while computer-generated renders of humans can appear indistinguishable from photos.

Source: TechPowerUp

A Very Brief History of Gaming

It’s truly astonishing just how far gaming capabilities have come since the launch of one of the first computer games, Spacewar, in 1962. It was produced by MIT student Steve Russell, who benefited from the university’s access to one of the earliest interactive computers on the market, a DEC PDP-1. It took Russell six months to code this two-player shooting game, in which each player commands a spaceship that seeks to obliterate the other.

Source: Spacewar!

Though the game was a hit with students and eventually even spread to other universities over the ARPANET, computing hardware was prohibitively expensive at the time. PDP-1s cost roughly $120,000 each in the 1960s, and sadly, both the machine and the original Spacewar were eventually relegated to the annals of history.

Instead, it was the legacy of Spacewar that lived on. One of the students lucky enough to play Spacewar at a rival university was none other than Nolan Bushnell. Between 1962 and 1968, Bushnell was studying engineering at the University of Utah, where he fell in love with the possibilities afforded by computer games. This fascination led Bushnell to start the company Atari in June 1972, which was devoted specifically to making video game hardware. Atari’s first game, Pong, which required its own dedicated hardware to play, was a smash hit in the United States, and its home version sold 150,000 units in 1975. By 1977, Atari had released the Atari 2600 console, which launched with nine cartridge games. It brought game console hardware into Americans’ living rooms, and it made Bushnell a millionaire (a fortune he later used to start the Chuck E. Cheese empire, but that’s a story for another time).

Atari’s consoles were such a success that they became a platform for third-party game creators, who began cropping up to code their own games that could run on Atari’s hardware. Four of Atari’s game developers left the company in 1979 to form their own game studio, which they called Activision. After a legal dispute, Atari ultimately let Activision make games for the Atari console as long as Atari received royalties on Activision’s sales, a model that’s still common in the game industry today.

Much to the chagrin of console makers in the late 80s, a rather unexpected competitor then entered the gaming landscape — the personal computer. Thanks to the scaling effects of Moore’s Law, computers were getting more powerful year over year, which meant they had more than enough computational power to simply reproduce many of the games that required consoles in the past.

In the wake of this shift, many engineers saw the incredible opportunity opening up in gaming and dove in. One of the most impactful organizations to make this leap was id Software. Like many great tech companies, id Software began as a breakaway: its team formed in 1991 when members of the game development team at Softdisk quit to strike out on their own.

Led by the brilliant John Carmack, the team produced a number of hits, from Commander Keen, a side-scrolling PC platformer in the spirit of Super Mario Bros., to Wolfenstein 3D, which popularized the 3D first-person shooter, a revolutionary format at the time, and represented an impressive technical achievement. At a time of limited memory and processing speeds, id Software succeeded in creating a fast-paced shooter game played from the perspective of the shooter. Frames changed fluidly as the character moved around the game arena, producing one of the first truly immersive games on the market.

Source: PlayDOSGames

id Software doubled down on the success of its shooter game format and in 1993 produced DOOM. To this day, DOOM and its sequels continue to enjoy an avid fanbase, who recognize its role as the progenitor of modern classic shooters like Modern Warfare, Halo, and more. The team then cemented its impact on the video game industry with the Quake engine, one of the first game engines widely licensed to other developers, which it used to build Quake.

Source: The Escapist

The Future of Gaming

Given gaming’s humble origins, it’s stunning to consider how much more realistic and immersive games have gotten and will continue to get. If improvements in hardware are any indication, the coming wave of realism and immersion will be profound.

In 2016, Tim Sweeney, the founder of Epic Games and creator of Unreal Engine, predicted that real-time photorealistic graphics would be achievable in gaming once GPUs could deliver 40 TFLOPS, or 40 trillion floating point operations per second. In 2022, NVIDIA released its most powerful GPU yet, the Hopper-based H100, rated at roughly 4 PFLOPS, or four quadrillion floating point operations per second. That is one hundred times the compute Sweeney said he’d need for photorealism, though the 4 PFLOPS figure applies to low-precision (FP8) AI math on a data-center chip rather than to the graphics workloads Sweeney had in mind.

And that’s not even the end of the story. NVIDIA has developed further techniques to improve real-time rendering performance. One of these is DLSS, or deep learning super sampling. Essentially, this technique uses artificial intelligence to boost the performance of rendering engines: in its most aggressive configuration, the engine needs to render only one out of every eight pixels displayed on screen, and an AI model infers the remaining seven.
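One plausible route to the one-in-eight figure rests on three assumptions:

- The display runs at 4K resolution.
- The engine renders internally at 1080p, which is one quarter of the output pixels.
- AI frame generation synthesizes every other displayed frame.

These settings are our assumptions for illustration. The text does not say which configuration produces the figure, and other DLSS modes give different ratios.

```python
# Assumed settings, chosen to illustrate one route to the "1 in 8" figure.
OUTPUT_W, OUTPUT_H = 3840, 2160        # 4K display resolution
INTERNAL_W, INTERNAL_H = 1920, 1080    # resolution the engine actually renders (assumed)
GENERATED_FRAME_SHARE = 0.5            # assumed: every other displayed frame is AI-generated

# Upscaling: each rendered frame supplies only a quarter of the displayed pixels.
rendered_per_frame = (INTERNAL_W * INTERNAL_H) / (OUTPUT_W * OUTPUT_H)

# Frame generation: only the non-generated frames are rendered at all.
rendered_fraction_overall = rendered_per_frame * (1 - GENERATED_FRAME_SHARE)

print(f"rendered: {rendered_fraction_overall:.3f} of displayed pixels")
print(f"inferred: {1 - rendered_fraction_overall:.3f} of displayed pixels")
```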

So, at the same time that hardware is becoming more powerful, new techniques are being released that will supercharge rendering capability even further. We’re approaching a point where path tracing, a technique that traces the paths of hundreds to thousands of light rays per pixel, can be feasible in real-time gameplay.
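Path tracing estimates each pixel's lighting by averaging many randomly chosen light paths. This is why the number of rays per pixel matters so much: the noise in such a Monte Carlo average shrinks only with the square root of the sample count.

Rather than tracing a full scene, the toy below estimates a single lighting integral: the light arriving at a surface from a uniformly bright sky, whose exact value is π. The setup and function name are our own illustration.

```python
import math
import random


def estimate_irradiance(num_samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the light reaching a surface under a uniformly bright sky.

    Each sample picks a direction uniformly over the hemisphere above the surface
    (probability density 1 / (2*pi) per steradian) and weights the incoming light,
    fixed at 1.0, by cos(theta). For uniform hemisphere directions, cos(theta) is
    itself uniformly distributed on [0, 1], so one random number per sample suffices.
    The exact answer is pi.
    """
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(num_samples):
        cos_theta = rng.random()          # height of a uniformly random hemisphere direction
        incoming_light = 1.0
        total += incoming_light * cos_theta / pdf
    return total / num_samples


rng = random.Random(42)                   # fixed seed so runs are repeatable
for n in (1, 16, 256, 4096):
    print(f"{n:>5} samples: estimate {estimate_irradiance(n, rng):.3f} (exact {math.pi:.3f})")
```

With one sample, the estimate can land anywhere between 0 and about 6.28. Thousands of samples are needed before it settles near π, which is the cost real-time path tracing has to overcome.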

The wrinkle here is that the massive GPUs required are expensive. Most consoles and even the top gaming computers don’t have access to this kind of hardware. This means that many gaming companies are now opting to use cloud-based computing to render real-time gameplay and stream it to players’ consoles or devices. It’s a more centralized form of gameplay that frustrates many avid gamers, since without an Internet connection, gameplay becomes impossible.

Another fascinating frontier for gaming will be found in virtual reality. In 2023, Apple announced the Apple Vision Pro, which went on sale in early 2024 and represented a significant step forward in the capabilities of virtual reality headsets. Already, game engine makers like Epic Games are exploring how Unreal Engine might run on the Vision Pro.

However, hosting games on a fully immersive headset still comes with many challenges. Rendering pipelines will need to be reworked for a device that must render not one but two views for the player, one for each eye. And though the Vision Pro’s hardware specs are impressive, they still fall short of the state-of-the-art hardware used to render the photorealistic graphics top gamers are used to. Off-device (cloud) compute could solve some of these problems, but even so, the move to more constrained hardware will present a new set of very interesting problems for programmers.
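Rendering two views mostly means placing two virtual cameras a small distance apart and drawing the scene once from each. The sketch below computes the two eye positions and the resulting pixel workload. Three values are assumptions:

- The 63 mm interpupillary distance is a typical adult value.
- The `Vec3` helper is a hypothetical type written for this example.
- The resolution and refresh rate passed in are placeholders, not Vision Pro specifications.

```python
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)


def eye_positions(head: Vec3, right: Vec3, ipd_m: float = 0.063) -> tuple[Vec3, Vec3]:
    """Offset the head position by half the interpupillary distance toward each side."""
    half = right.scale(ipd_m / 2)
    return head + half.scale(-1.0), head + half


def stereo_pixels_per_second(width: int, height: int, refresh_hz: int) -> int:
    """Pixels to shade each second when every displayed frame needs two views."""
    return 2 * width * height * refresh_hz


left_eye, right_eye = eye_positions(Vec3(0.0, 1.7, 0.0), Vec3(1.0, 0.0, 0.0))
print(left_eye, right_eye)
print(stereo_pixels_per_second(1000, 1000, 90))   # placeholder resolution and refresh rate
```

At the same per-eye resolution and refresh rate, stereo rendering doubles the shading work compared with a single view.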

Computer games are still a new medium. They’ve only been around since the 1960s, and yet they’ve already had a transformative impact and become the largest source of global entertainment, eclipsing even music and film. Games have also become one of the most powerful expressive mediums because of the number of variables they can manipulate with respect to player experience: everything from how players experience time, space, images, sounds, and more is at the discretion of the game designer.

We are nowhere near exhausting the full potential of the video game medium, which, with more time and more artistry on the part of game designers, will take us to even deeper levels of immersion and even more profound virtual experiences. When it does, the gaming industry will lift the tide for the entire computing industry, as it has done since the very beginning.

Disclosure: Nothing presented within this article is intended to constitute legal, business, investment or tax advice, and under no circumstances should any information provided herein be used or considered as an offer to sell or a solicitation of an offer to buy an interest in any investment fund managed by Contrary LLC (“Contrary”) nor does such information constitute an offer to provide investment advisory services. Information provided reflects Contrary’s views as of a time, whereby such views are subject to change at any point and Contrary shall not be obligated to provide notice of any change. Companies mentioned in this article may be a representative sample of portfolio companies in which Contrary has invested in which the author believes such companies fit the objective criteria stated in commentary, which do not reflect all investments made by Contrary. No assumptions should be made that investments listed above were or will be profitable. Due to various risks and uncertainties, actual events, results or the actual experience may differ materially from those reflected or contemplated in these statements. Nothing contained in this article may be relied upon as a guarantee or assurance as to the future success of any particular company. Past performance is not indicative of future results. A list of investments made by Contrary (excluding investments for which the issuer has not provided permission for Contrary to disclose publicly, Fund of Fund investments and investments in which total invested capital is no more than $50,000) is available at www.contrary.com/investments.

Certain information contained in here has been obtained from third-party sources, including from portfolio companies of funds managed by Contrary. While taken from sources believed to be reliable, Contrary has not independently verified such information and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation. Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Please see www.contrary.com/legal for additional important information.