The ability to perceive objects, discern their dimensions, and contextualize them alongside our relative position in space is a core ingredient in humans’ evolved ability to think, learn, and direct our own actions. For this reason, teaching perception to machines is key to the advancement of both artificial intelligence and robotics.

As I wrote in a past essay, robots can be thought of as computers with a body; a kind of embodied computation. Mechanical bodies have three areas of competency: sensing (computer vision), thinking (AI), and acting (robots themselves). Having covered the Acceleration of Artificial Intelligence and the Rise of Robots in previous posts, it’s now time to turn to the first part of this triad by exploring lidar, which lies on the frontier of computer vision.

Lidar, short for “light detection and ranging” (similar to “radar”, which is an acronym for “radio detection and ranging”), has emerged as a technology that significantly improves perception for both humans and machines. While traditional visual perception relies on capturing light to create 2D images and then inferring depth from them, lidar captures a 3D model of its environment from the get-go. It does this by firing short laser pulses and measuring the time each one takes to reflect off a surface and return, which gives the distance to that surface. By firing hundreds of thousands of pulses per second, lidar can build a detailed 3D scan of its surroundings.
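To make the time-of-flight idea concrete, here is a minimal sketch in Python. The function name and the sample echo delays are my own illustrative assumptions, not taken from any particular lidar product. The only physics involved is that the pulse travels to the surface and back, so the distance is half the round-trip path.

```python
# Speed of light in vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance (meters) for a measured round-trip time.

    The pulse travels out to the surface and back again, so the distance
    to the surface is half of the total path length.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Hypothetical echo delays, chosen only for illustration.
    for delay_ns in (10.0, 100.0, 1000.0):
        distance_m = range_from_round_trip(delay_ns * 1e-9)
        print(f"{delay_ns:7.1f} ns round trip -> {distance_m:8.2f} m")
```

A round trip of one microsecond corresponds to a surface roughly 150 meters away, which is why lidar receivers need timing electronics that resolve nanoseconds.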

Though its core technology has been around for over half a century, it’s only within the previous twenty years that lidar has found its killer app: autonomous driving. The demand from the self-driving industry has transformed lidar from a rarity to an increasingly common technology that’s already built into recent iPhone Pro models.

Lidar has unlocked new vistas, allowing us to do everything from measuring continental drift to docking spacecraft with the ISS and discovering lost cities. But there’s still a lot of potential for further advancement with lidar, because the economics of scaling haven’t even fully kicked in yet. With the prospect of Moore’s Law-like improvements in precision and cost for lidar technology over the coming years, it’s high time to review the history of lidar and explore its potential impact across the future of industries and consumer goods.

Directing Light

At the core of lidar technology is the ability to control the direction of light. Historically, light mystified philosophers and scientists. It wasn’t at all clear where light actually came from. The Sun produced it, as did fire; but how? Strikes of lightning and electricity seemed to create it too; but why?

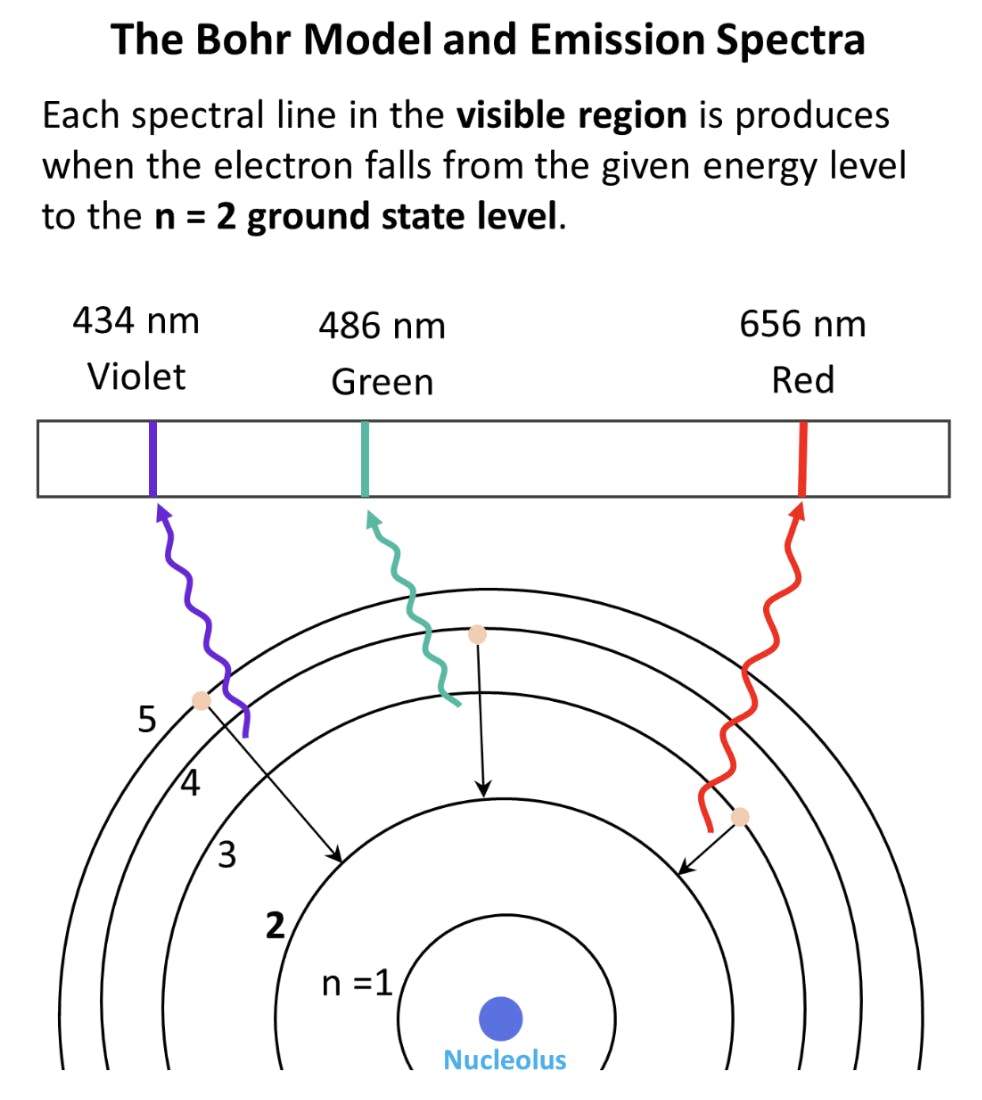

It would take until the years around the First World War for these questions to finally be answered by the independent efforts of Niels Bohr and Albert Einstein. Bohr had been studying the structure of the atom and was conducting spectroscopy experiments in which he would take gases like hydrogen or nitrogen and expose them to an electrical current. When the gas started to glow, he would direct the light through a prism to reveal the discrete colors emitted by the gas.

Each gas kept producing the same set of visible colors. This led Bohr to conclude that atoms must contain electrons at discrete energy levels, and that jumps between those levels correspond to specific wavelengths of radiation.

Source: Chemistry Steps

In other words, Bohr noticed that after an atom’s electrons absorbed energy, they released that energy in the form of electromagnetic radiation, or light. The exact wavelength of light they released depended on the energy level of the electrons.

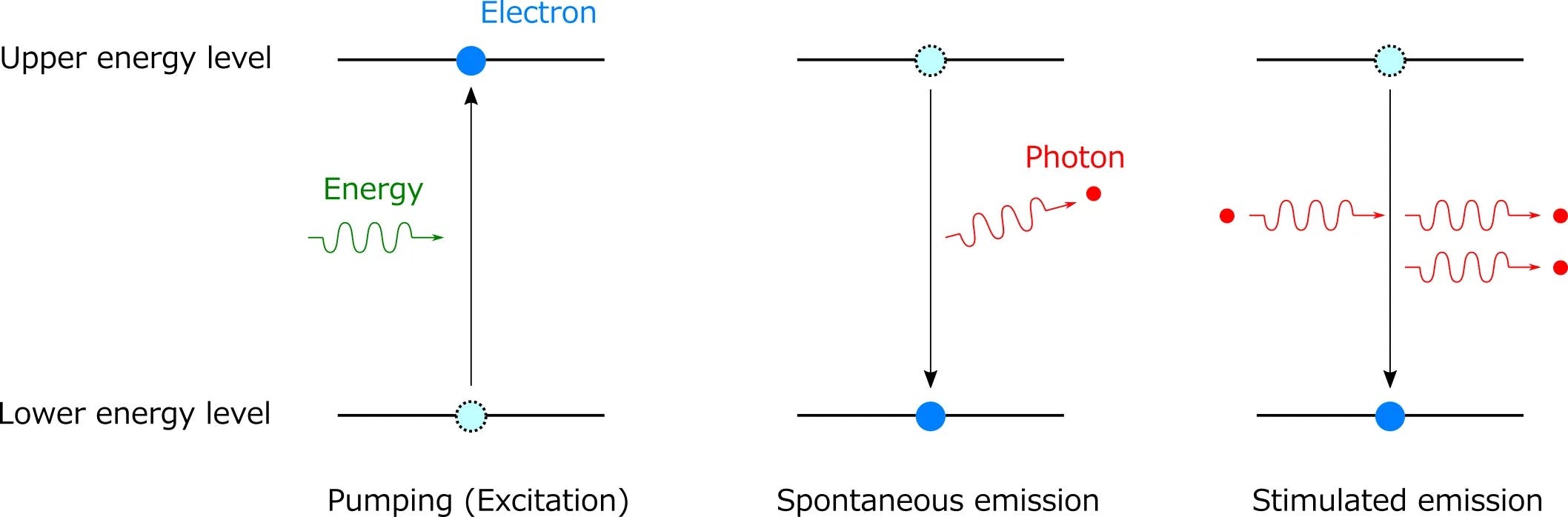

A few years later, in 1917, Albert Einstein built on Bohr’s work to describe a process called “spontaneous emission,” which explains how an atom’s electrons produce light.

According to Einstein, electrons absorb energy from photons to reach higher energy states. When an electron returns to its ground state, it releases a photon in some random direction as electromagnetic radiation, or light. The energy of the released photon equals the difference between the higher energy state and the ground state, and its wavelength is inversely proportional to that energy.
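The link between an energy gap and a color can be checked with a few lines of arithmetic. The sketch below is an illustration under stated assumptions: it uses the textbook Bohr formula for hydrogen's energy levels (level n sits at about -13.6 eV divided by n squared), which is not spelled out in this essay, and the function names are my own. The wavelength follows from dividing Planck's constant times the speed of light by the energy gap.

```python
# Physical constants (CODATA values, rounded).
PLANCK_EV_S = 4.135667696e-15          # Planck constant in eV*s
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0 # speed of light in m/s
RYDBERG_ENERGY_EV = 13.605693          # hydrogen ground-state binding energy in eV


def bohr_level_ev(n: int) -> float:
    """Energy of hydrogen level n in the simple Bohr model (assumption)."""
    if n < 1:
        raise ValueError("principal quantum number must be >= 1")
    return -RYDBERG_ENERGY_EV / n**2


def emitted_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the photon released when an electron drops levels."""
    if n_upper <= n_lower:
        raise ValueError("emission requires n_upper > n_lower")
    # The photon carries away exactly the energy difference between levels.
    energy_gap_ev = bohr_level_ev(n_upper) - bohr_level_ev(n_lower)
    # wavelength = h * c / E, so a larger gap means a shorter wavelength.
    wavelength_m = PLANCK_EV_S * SPEED_OF_LIGHT_M_PER_S / energy_gap_ev
    return wavelength_m * 1e9


if __name__ == "__main__":
    print(f"n=3 -> n=2: {emitted_wavelength_nm(3, 2):.1f} nm")
    print(f"n=4 -> n=2: {emitted_wavelength_nm(4, 2):.1f} nm")
```

Under these assumptions, the drop from the third to the second level lands at roughly 656 nanometers, the familiar red line in hydrogen's spectrum, while the larger drop from the fourth level gives a shorter, blue-green wavelength.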

Source: Fiber Labs

In that same paper, Einstein also wondered what might happen if you kept bombarding an electron in an elevated energy state with more photons. Einstein predicted that if you kept shooting photons at an already excited electron, the photons it would emit would not radiate out randomly, but rather move in the same direction as the photons shot at it. Furthermore, the rate of overall photon emission would be amplified due to its already excited state! The theoretical result would be an amplified light beam traveling in the same direction. He called this process “stimulated emission.”

Spontaneous emission describes the orb-like glow we normally experience with visible light as it shoots out in random directions, but stimulated emission imagined what it might take to get light waves to move neatly in the same direction.

What’s remarkable is that Einstein predicted this behavior years before the modern theory of quantum mechanics was formulated. The reason photons move in the same direction under stimulated emission is explained today by the very complicated laws and mathematics of quantum field theory. How exactly Einstein, lacking those tools, was able to accurately describe the supremely counterintuitive behavior of stimulated photon emission is beyond us. In any case, his prescient ideas gave us the clues to how we might one day be able to control light for our own devices.

The Birth of the Laser

Designing a device to test out Einstein’s ideas of stimulated emission would take almost four more decades. In 1953, Charles Townes, James P. Gordon, and Herbert J. Zeiger were the first to validate the theory of stimulated emission by building a device called a “maser,” short for “microwave amplification by stimulated emission of radiation,” which excited a column of ammonia gas to emit microwaves in a parallel direction.

There was a great deal of interest in learning to direct electromagnetic waves at specific targets after the invention of radar, which was deployed to great effect during World War II. Radar designs at the time were simple and effective. A basic radio antenna produced a stream of radio waves radiating outward. When the waves hit a reflective object, they would bounce back in the direction of the antenna and eventually be picked up by a receiver. The receiver measured how long the waves took to travel to the reflective surface and back, revealing the distance to the object. Radio waves bounce especially well off metal surfaces, which proved rather useful for reconnaissance and for detecting enemy vehicles on a battlefield.

The problem with radio waves was their size. Radio wavelengths range from millimeters to kilometers, far longer than those of visible light, which limited how precisely radar could pin down the coordinates of an object. As a result, there was a mad post-war dash to fund research on electro-optics and range detectors that used shorter wavelengths to detect objects with greater accuracy. The invention of the maser eventually won Townes a share of the Nobel Prize in Physics, but it was just a stepping stone to an invention that would truly change the world: the laser.

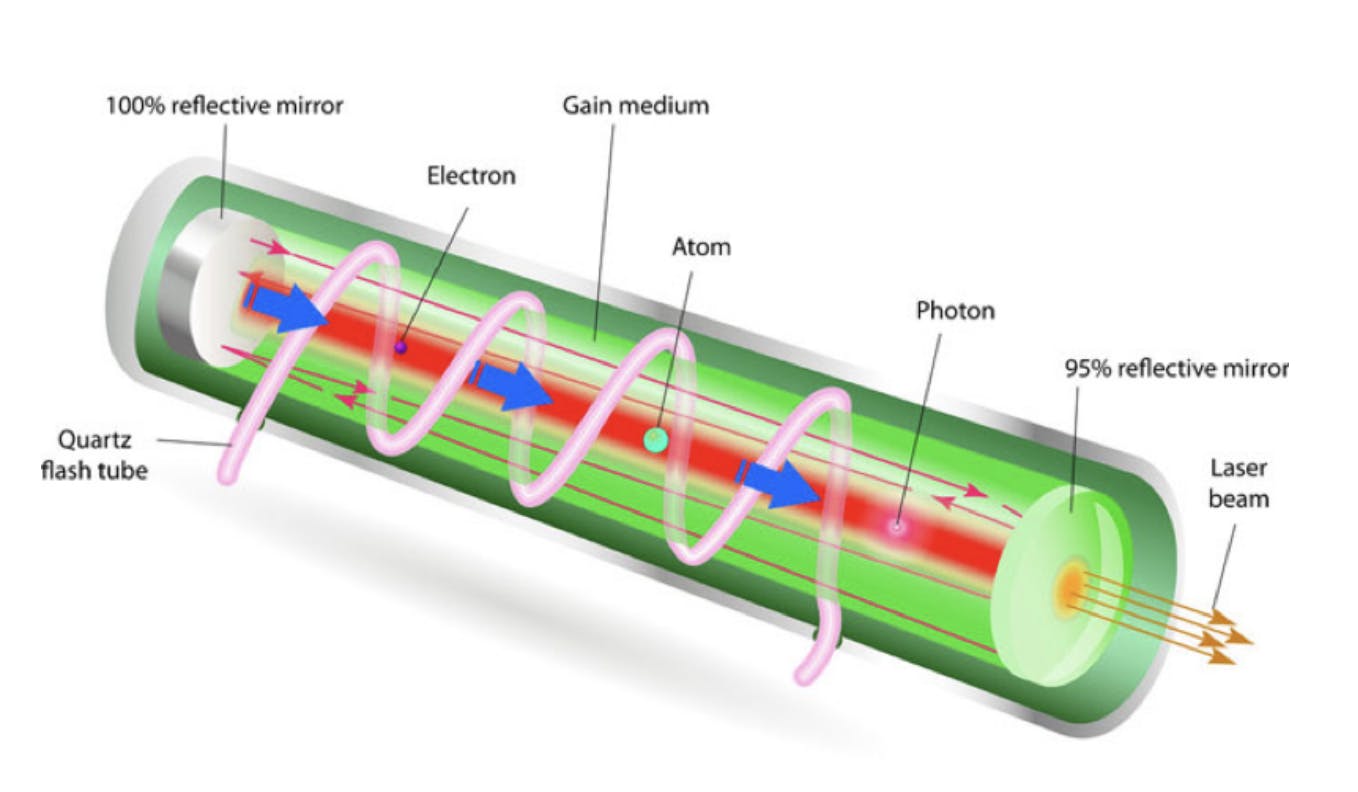

Townes and his colleagues tried for years to develop a functioning laser through stimulated emission, but it was ultimately Theodore Maiman, a Stanford physics graduate working at Hughes Aircraft’s research laboratories in California, who figured out the trick in 1960.

Maiman, who had been studying the use of crystals in optics, got the idea to take a synthetic ruby crystal and surround it with a coiled flash tube that would excite the electrons in the ruby. The crystal was book-ended by mirrors, one of which held a tiny opening. When Maiman fired the flash tube, a concentrated beam of ruby red light came out through that opening. Thus, the laser was born.

Source: Science4Fun

Immediately after Maiman invented the laser, Hughes began investing in commercializing the technology. Its first product was an early lidar-like tool called the “Colidar,” short for “coherent light detection and ranging,” built in 1963. It operated like a highly precise range-finder. In military scenarios, range-finders like this were used to measure the distance to objects of interest and determine whether they were within artillery range. The Colidar could shoot a beam of light up to 7 miles out, and its receiver could locate objects to within 15 feet.

Source: Photon Lexicon

Laser technology improved all through the sixties, a decade of incredible progress in electronics that led engineers to experiment with all kinds of new lasers. Gas lasers and even semiconductor lasers quickly became a reality, eventually leading the way to new applications including spectroscopy, laser surgery, and industrial uses like cutting and welding with light.

As lasers got more powerful, researchers began to see the utility of laser sensing and detection in other contexts. The first lunar laser ranging experiments were conducted at MIT in 1962, when researchers bounced laser beams off the Moon to measure its distance from the Earth. By the early 70s, laser altimeters were flying to the Moon aboard the later Apollo missions.

The development of faster-pulsing lasers throughout the nineties made it possible to use laser light to sweep across an entire horizon, rather than just targeting one specific point. By rotating the lasers through mechanical motion, lidar systems could detect objects of all sizes across a full horizon. When mounted on planes, lidar systems were able to produce some of the first topographical maps of the Earth’s surface. In 1996, the Mars Orbiter Laser Altimeter, or MOLA, was sent to orbit around Mars, and after a period of four and a half years, succeeded in completing a full 3D scan of the planet.
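A sweeping lidar reports each return as a range plus the direction the beam was pointing, and a 3D scan is just those measurements converted into x, y, z coordinates. Here is a minimal sketch of that conversion. The axis convention (x forward, y left, z up) and the sample returns are assumptions I chose for illustration; real sensors document their own conventions.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def polar_to_cartesian(range_m: float, azimuth_deg: float,
                       elevation_deg: float) -> Point:
    """Convert one lidar return to (x, y, z) in meters.

    Assumed convention: azimuth is measured in the horizontal plane from
    the x axis toward the y axis, elevation is measured up from that plane.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    # Project the range onto the horizontal plane, then split it into x and y.
    horizontal = range_m * math.cos(elevation)
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = range_m * math.sin(elevation)
    return (x, y, z)


def sweep_to_point_cloud(
        returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Convert (range, azimuth, elevation) returns into a list of 3D points."""
    return [polar_to_cartesian(r, az, el) for r, az, el in returns]


if __name__ == "__main__":
    # Hypothetical returns from one quarter turn of a rotating scanner.
    sample_returns = [(12.0, az, -2.0) for az in range(0, 90, 15)]
    for x, y, z in sweep_to_point_cloud(sample_returns):
        print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```

Stacking many such sweeps, each taken at a different elevation or from a moving platform such as a plane, is what turns individual range readings into a map of terrain.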

Though these were impressive achievements, lidar systems were few and far between. Most were housed in universities or commissioned by massive government agencies. The appeal of lidar was initially its military applications. However, with the arrival of GPS and other kinds of communications technologies, lidar lost its killer use-case. The technology then stagnated and was largely forgotten until about 2004, when things changed once again.

Lidar’s Killer App

In 2004, DARPA, the Defense Department’s research arm, hosted its first “Grand Challenge,” a competition meant to encourage the development of autonomous vehicles. The US Congress authorized the challenge with the hope that by 2015, a third of the military’s ground combat vehicles would be autonomous. DARPA structured the Grand Challenge as a race. The first self-driving car to complete a roughly 150-mile route in the Mojave Desert would win $1 million. However, the outcome was very disappointing. None of the teams finished. The best performance was by Carnegie Mellon’s autonomous car, which managed to get about 7 miles (roughly 11 kilometers) before getting stuck on a rock and stopping. No prize money was awarded.

The biggest problem with self-driving was getting the car to perceive the route and the obstacles along it. Computer vision was a difficult problem that AI researchers across the country were struggling to solve. Computers could begin to recognize discrete objects in an image, but discerning depth from a two-dimensional image was a really challenging problem. It seemed that nothing less than a fully-functioning brain would be capable of sophisticated enough perception for autonomous vehicles. With only a few months to go before the 2005 Grand Challenge, the teams figured that something needed to change. Rather than relying on cameras alone for vision, the Stanford team decided to place lidar detectors on top of its car.

Cameras see color, but lidar sees distance. With a lidar system, computers could see in all three dimensions. It was a brilliant idea with a big reward at the end. That year, five cars completed the track, but it was Stanford’s lidar-enabled car “Stanley” that got the fastest time: just under six hours and fifty-four minutes. Stanford’s team won a prize of $2 million, and the advantage of lidar for self-driving was cemented in history.

Source: Smithsonian Magazine

Since then, numerous self-driving startups have sprung up, eager to push the technology forward with the help of lidar. The only problem was that there was no off-the-shelf lidar product they could buy to get started. Most lidar systems were terribly expensive and clunky. To create a 360-degree field of view, a laser emitter and receiver had to be mounted on top of the car and rotated mechanically.

Velodyne was one of the main manufacturers of such systems, which it sold for $75,000 apiece prior to 2017. Major self-driving operations from Alphabet’s Waymo to Caterpillar and Ford outfitted their vehicles with Velodyne’s five-figure spinning lidar systems. Each unit had 64 spinning lasers and could generate hundreds of thousands of data points per second at a range of up to 200 meters.

The early days of self-driving were odd in that way. The sensors cost more than the cars themselves, and just because the lidar could produce a three-dimensional “point cloud” representing a field of vision didn’t mean that there was mature software capable of classifying the objects in the line of sight. The situation led to Elon Musk making his now-famous statement that “anyone relying on LIDAR is doomed.”

As a result, Tesla decided to go in a different direction for its own autonomous efforts. In 2014, Tesla retrofitted its Model S cars with 360-degree cameras as part of its new advanced driver-assistance features, which had the additional benefit of logging driving data to continue training its camera-based self-driving model.

After this, the battle lines were drawn. On the other side of the schism, teams were doubling down on lidar. By 2017, after seven years of tinkering, Alphabet’s Waymo had begun producing its own lidar systems in-house and achieved remarkable cost savings. Its in-house systems ended up costing only $7,500 to produce, a tenfold reduction from the $75,000 units of just a few years earlier (but still more expensive than Tesla’s cameras).

Though the exact design of Waymo’s sensors is not fully known, Alphabet’s team likely had many novel options to choose from, as a range of cheaper and more energy-efficient lidar systems had emerged following Stanley’s success in the 2005 Grand Challenge.

In particular, three approaches emerged as alternatives to Velodyne’s spinning design:

1. The microelectromechanical systems (MEMS) mirror approach: Rather than spinning the lasers around, this approach fires the laser beam onto a tiny moving mirror, which sweeps the beam across the full horizontal field of view. This is essentially a miniaturized version of the classic lidar system. Another tiny mirror can be added to redirect the laser beam along the vertical axis, too, but as more moving parts are added, the complexity of the system grows. Smaller size and lower cost are the advantages here, but any kind of moving part inevitably exposes the system to wear and tear. The mirrors are also sensitive to the shocks and vibrations of normal driving, which could hinder performance.

2. Flash Lidar: Rather than emitting hundreds of thousands to millions of tiny beams of laser light in all directions, flash lidar floods the entire field of view with one large laser pulse. It yields a more compact system but requires a pretty powerful burst of light. The power of the light is limited by the fact that this light can’t be blinding to human eyes, which unfortunately limits the detection range on these systems. Currently, the detectors for eye-safe flash lidar are still rather pricey, which is another hiccup with this option. At the moment, flash lidar systems are only truly appropriate on larger systems like planes conducting aerial surveys.

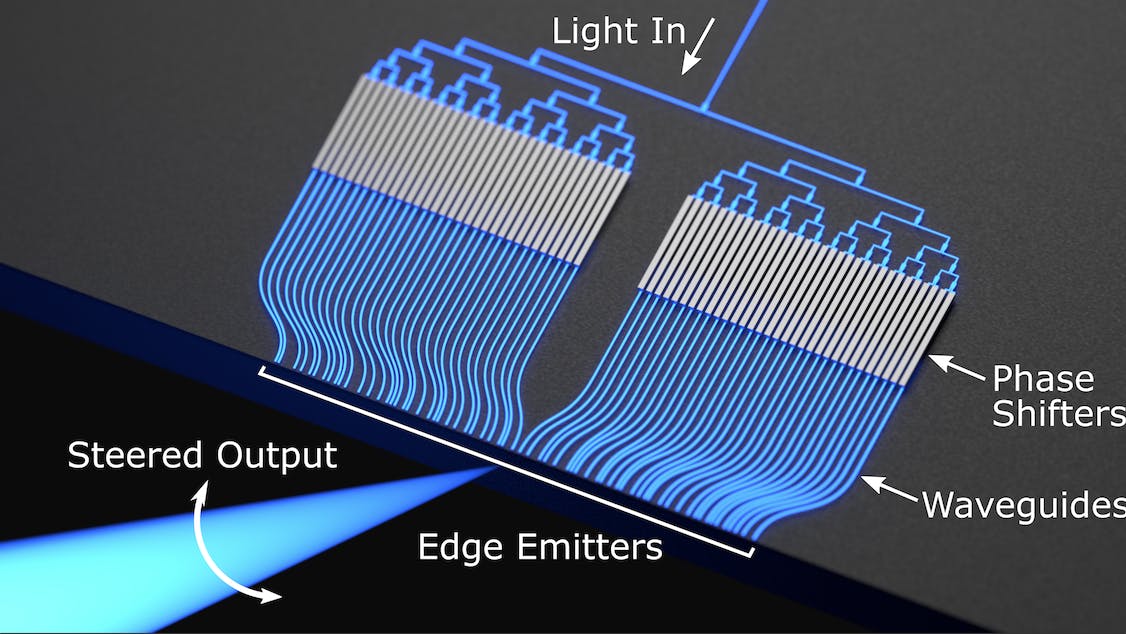

3. Optical Phased Arrays: A full lidar system with no moving parts and no soldering required is one of the most exciting and promising new designs on the market. This is the future promised by optical phased arrays. By adjusting electronically controlled phase shifters, a single laser beam can be quickly steered to scan a wide field of view. The beam can be steered along both the horizontal and vertical axes without any moving parts. To do this, millions of array elements are required, placed just microns apart. Although this sounds rather challenging, the photolithography techniques developed by the microchip industry are well suited to the job. For this reason, optical phased arrays printed on silicon wafers are being called “optics on a chip.” Just as microchips had their era of Moore’s Law scaling, many foresee the same happening for these miniaturized lidar systems. As the scale of manufacturing increases, the costs of these chips and lidar systems are expected to decrease dramatically.
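To see how phase shifters alone can steer a beam, consider the standard textbook relation for a uniform row of emitters: if each element is delayed by a fixed phase step relative to its neighbor, the combined wavefront tilts, and the sine of the steering angle equals the wavelength times the phase step divided by 2π times the element spacing. The sketch below is an idealized illustration rather than a model of any real chip. The 1550 nm wavelength and 2 micron spacing are hypothetical values, and the sign convention is my own assumption.

```python
import math


def steering_angle_deg(wavelength_m: float, pitch_m: float,
                       phase_step_rad: float) -> float:
    """Main-beam angle for a uniform linear array (idealized model).

    phase_step_rad is the phase difference applied between neighboring
    emitters; pitch_m is the center-to-center emitter spacing.
    """
    sin_theta = wavelength_m * phase_step_rad / (2.0 * math.pi * pitch_m)
    if abs(sin_theta) > 1.0:
        raise ValueError("phase step too large for this wavelength and pitch")
    return math.degrees(math.asin(sin_theta))


def phase_step_for_angle(wavelength_m: float, pitch_m: float,
                         angle_deg: float) -> float:
    """Inverse relation: phase step needed to point the beam at angle_deg."""
    return (2.0 * math.pi * pitch_m
            * math.sin(math.radians(angle_deg)) / wavelength_m)


if __name__ == "__main__":
    wavelength = 1.55e-6  # hypothetical infrared laser wavelength, meters
    pitch = 2.0e-6        # hypothetical emitter spacing, meters
    for target_deg in (-10.0, 0.0, 10.0):
        step = phase_step_for_angle(wavelength, pitch, target_deg)
        check = steering_angle_deg(wavelength, pitch, step)
        print(f"target {target_deg:6.1f} deg -> phase step {step:7.3f} rad "
              f"-> beam at {check:6.1f} deg")
```

Because the phase step can be changed electronically, the beam can be repointed without moving anything. One caveat of this simple model: when the spacing is larger than half a wavelength, as in the hypothetical values here, extra side beams appear alongside the main one, which is part of why packing the elements so closely matters.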

Source: Columbia University

The Future of Lidar

Lidar technology has emerged from the shadows of obscurity to claim its well-deserved moment in the spotlight. In his book “The Laser That’s Changing the World,” Todd Neff calls lidar a “most striking macroscopic application of quantum physics.” The ability to laser scan our physical surroundings has already enabled us to do extraordinary things.

Powerful airborne lidar systems can measure sea levels and melting glaciers, and can produce scans of entire planets. Such systems give us the ability to assess the terrain of the Earth from above, even under dense tree cover. One recent application of this has been uncovering lost ancient cities, like one reported in May 2022 hidden deep in the Amazon forest, imperceptible to satellites or even passersby, but incapable of fooling the eyes of airborne lidar.

Lidar is also used to measure distance in space. Today, it already helps SpaceX’s Dragon capsule navigate and dock with the International Space Station. One day, its applications in space might be even more expansive. One startup called Nuview, for example, is interested in launching a fleet of satellite-borne lidar systems to create a full 3D scan of planet Earth, akin to the model we already have of Mars.

Many might also be surprised to hear that lidar is already installed on some of our phones. Apple’s Pro-model iPhones, starting with the iPhone 12 Pro, include a miniaturized lidar system capable of creating 3D scans at a range of up to 5 meters. Short-range lidar is an easier problem to solve than larger systems that must handle long ranges and fast point processing, all with eye-safe laser beams. Sony is Apple’s present lidar system vendor, and is already mass manufacturing a suite of small lidar systems that can be added to any phone or device.

Lidar is one of those technologies for which we have long had all the components and technical know-how, but which was not produced at scale because the economic incentive was missing. Over the previous decade, the pursuit of autonomous driving has acted as a catalyst for the rapid advancement of lidar technology, unleashing a wave of innovation reminiscent of Moore's Law scaling, with continuous improvements in sensor accuracy, range, and affordability.

Looking to the future, lidar has the potential to become an indispensable feature in wearable devices for professionals such as police officers and firefighters. Wearable lidar could enhance their situational awareness, enabling them to navigate complex and hazardous environments with greater precision and safety.

Lidar may also extend to the realm of motion capture, where the technology's ability to capture fluid, real-time 3D motion has the potential to massively decrease costs in the entertainment industry. Instead of relying on cumbersome and time-consuming methods such as actors wearing motion-capture “mocap” suits, lidar can seamlessly record and digitize human movements, leading to more efficient and realistic CGI-enhanced videos and animations.

Source: VFX Voice

Real-time monitoring can also lead to new applications in construction and engineering. The ability to capture and analyze construction progress in real time would mean better project management, on-the-fly adjustments, and a more comprehensive understanding of structural integrity. In manufacturing, lidar's fast 3D scanning capabilities could significantly reduce production times by enabling quick and precise scans of components or component models, letting you go from 3D scanning to 3D printing within a few minutes.

And of course, lidar is already playing an important role in enhancing robotic perception. With the integration of lidar sensors, robots can swiftly and accurately recognize and understand their environment in three dimensions, enabling them to operate autonomously and navigate complex surroundings with greater efficiency.

As lidar technology continues to advance and find its footing in an array of applications, it is evident that its transformative potential reaches far beyond its original conception. The convergence of lidar with various disciplines promises a future of precision, efficiency, and discovery.

Disclosure: Nothing presented within this article is intended to constitute legal, business, investment or tax advice, and under no circumstances should any information provided herein be used or considered as an offer to sell or a solicitation of an offer to buy an interest in any investment fund managed by Contrary LLC (“Contrary”) nor does such information constitute an offer to provide investment advisory services. Information provided reflects Contrary’s views as of a time, whereby such views are subject to change at any point and Contrary shall not be obligated to provide notice of any change. Companies mentioned in this article may be a representative sample of portfolio companies in which Contrary has invested in which the author believes such companies fit the objective criteria stated in commentary, which do not reflect all investments made by Contrary. No assumptions should be made that investments listed above were or will be profitable. Due to various risks and uncertainties, actual events, results or the actual experience may differ materially from those reflected or contemplated in these statements. Nothing contained in this article may be relied upon as a guarantee or assurance as to the future success of any particular company. Past performance is not indicative of future results. A list of investments made by Contrary (excluding investments for which the issuer has not provided permission for Contrary to disclose publicly, Fund of Fund investments and investments in which total invested capital is no more than $50,000) is available at www.contrary.com/investments.

Certain information contained in here has been obtained from third-party sources, including from portfolio companies of funds managed by Contrary. While taken from sources believed to be reliable, Contrary has not independently verified such information and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation. Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Please see www.contrary.com/legal for additional important information.