Ever since Isaac Asimov coined the term “robotics”, we’ve been bracing ourselves for a world where robots are everywhere. The Jetsons had us expecting Rosie the Robot would be taking care of all our household chores. The reality turned out to be starkly different.

Rather than robots living among us, the vast majority of robots we do have are mostly hidden from view. They are sheltered in factories where they toil away screwing caps onto bottles or sorting components.

Though automation is increasing across every aspect of life, we don’t yet trust robots to act fully outside of our supervision. In the most crucial situations, robots act as extensions of ourselves. Remote-controlled drones, interplanetary rovers, disaster relief bots, and even surgical robots allow us to operate in dangerous or critical environments with more accuracy. However, even the cute delivery robots that scoot alongside us on sidewalks are, more often than not, human-operated.

The greatest challenge within the field of robotics thus far has been getting robots to think for themselves and make decisions in dynamic environments where they have to deal with uncertainty. Though progress on this front has stalled for decades, recent breakthroughs in AI driven by success with large language models are re-invigorating research in novel robot training approaches, signaling a possible new spring in robotics research.

Robotic Primitives

The main thing distinguishing a computer from a robot is the fact that robots can act upon the physical world. Robots are computers with a body, a kind of embodied computation.

To interact with the world, all robots must have three main competencies: sensing, thinking, and acting. As a result, the field of robotics itself can be subdivided into these three main buckets.

Sensing

Sensing lies on a spectrum of complexity. The simpler side of this spectrum involves tactile mechanisms like switches that go off when they bump into an object or proximity sensors that detect the presence or absence of an object. These cheap and light mechanisms are widely used in smaller robot systems like the Roomba, for example.
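To make the simple end of that spectrum concrete, here is a minimal sketch of a bump switch driving a sense, think, act loop. It is a toy simulation, not how the Roomba or any real product is programmed: the `Robot` class, the one-dimensional corridor, and the `wall_at` parameter are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Robot:
    """A hypothetical robot driving along a one-dimensional corridor."""
    position: int = 0
    heading: int = 1  # +1 drives right, -1 drives left


def sense(robot: Robot, wall_at: int) -> bool:
    """Bump switch: True when the next step would run into the wall."""
    return robot.position + robot.heading == wall_at


def think(bumped: bool) -> str:
    """Hard-coded policy: turn around on contact, otherwise keep going."""
    return "reverse" if bumped else "forward"


def act(robot: Robot, command: str) -> None:
    """Actuation: flip the heading on 'reverse', then move one cell."""
    if command == "reverse":
        robot.heading = -robot.heading
    robot.position += robot.heading


def run(steps: int, wall_at: int) -> List[int]:
    """Run the sense-think-act loop and record the position after each step."""
    robot = Robot()
    trace = []
    for _ in range(steps):
        bumped = sense(robot, wall_at)
        command = think(bumped)
        act(robot, command)
        trace.append(robot.position)
    return trace
```

Calling `run(5, wall_at=3)` returns `[1, 2, 1, 0, -1]`: the robot advances twice, senses the wall, reverses, and drives away. Everything interesting about a real robot lives in making `sense` and `think` less trivial than this.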

However, for decades, researchers have striven to give robots more sophisticated methods of perception. For instance, some of the earliest efforts in artificial intelligence involved getting computers to develop visual perception and recognize images. For a long time, such computer vision systems were only capable of “seeing” in two dimensions. However, as light detection and ranging (LIDAR) and radio detection and ranging (RADAR) sensors, both of which long predate modern robotics, were adopted in robotic systems from the early 2000s onward, top-of-the-line systems became capable of seeing in 3D, much as we do.

While vision has been the most complicated sensory faculty to mimic in computers, everything from sound to touch, and now even smell, is also being actively explored.

Thinking

Thinking is one of the outstanding faculties that we have yet to get right. The field of robotics has had tremendous success with the physical engineering side of robotics, particularly in cases where the robots were hard-coded to perform repetitive, highly predictable tasks. Teaching robots to behave appropriately in dynamic and uncertain environments, on the other hand, has always been a very difficult undertaking. Part of the challenge can be chalked up to the fact that physical activity is very complicated. Though most of us don’t consciously think about it, standing on our own two feet involves a careful balancing act, shifting around our weight in line with the signals from our vestibular system which orients our body in space. Gary Zabel, a lecturer at UMass, writes, “It requires more neurological resources in terms of sheer brain mass to walk on two legs than it does to play chess or prove mathematical theorems, both of which computers have been doing since the 1950s.”

If you want a visual guide to the trials and tribulations of getting physical behavior right, just take a look at this compilation of robots losing their battles against gravity.

Acting

Actuators create the physical form factors of robots. Their role is to convert energy into mechanical force.

The design of actuators determines what robots can do and how they do it. Actuation is also where the challenges that set robotics apart from traditional computer science become immediately apparent. As soon as you settle on a physical form, everything that your robot can do must conform to the constraints of its actuator design. This is why it’s more straightforward to design arms for robots that do well-specified and repetitive tasks, versus arms for robots expected to operate in a general fashion.

The fundamental components of actuation are the engineering primitives that combine to produce a machine capable of the desired movements. When designing a robotic arm or mechanism, engineers can choose between using electric motors (useful for smaller, less energy-intensive applications), hydraulic actuators (which use pressurized fluid to produce a great amount of force and are often used in industrial settings), or pneumatic actuators (which use compressed air to produce movements required for quick, precise activities).

The design of a robot’s physical components, and the nature of the materials they use, is often biologically inspired. Take, for instance, the Gecko robot that can scale windows, or the robot arm that elongates and tenses up just like a human muscle.

Material design also plays a big role in creating new robotic primitives. Some examples include the research being conducted on shape memory alloys and piezoelectric actuators, which rely on materials that change shape when exposed to heat or electrical current, respectively, and can allow for incredibly precise movements.

A Taxonomy of Robots

The combined abilities to sense, think (however primitively), and actuate take you most of the way toward making any kind of robot you want. Robots already operate in the air, in space, and underwater. They are used in everything from disaster response to entertainment and even medicine. Though there are many specific kinds of possible robots, the two biggest and most influential categories are industrial robots and autonomous mobile robots.

Industrial Robots

Industrial robots are designed to perform a range of tasks that are too difficult, dangerous, or repetitive for humans, like lifting extreme weights, working in extreme temperatures, or manufacturing with extreme precision. However, such robots are typically built for operation in highly structured environments with very few unknowns.

To make them easier to produce off the shelf, industrial robot form factors usually come in one of four major designs: articulated arms, parallel arms, cartesian arms, and selective compliance assembly robot arms (SCARA arms). Each of these can then be configured to perform the exact task you want, albeit at quite a cost.

Source: McKinsey

Not only is industrial robot configuration expensive, it’s also rather involved. There are robot parts suppliers like ABB, KUKA, Toshiba, EPSON and so on, but these companies specialize only in the basic hardware. The robotic arms they produce are like a drill with no drill bit, and it is the drill bit that determines what the drill can ultimately be used for.

With industrial robotics, the drill bit is called the “end effector,” and these are typically designed to handle a specific task. However, finding an end effector is not the end of the story. There are all kinds of other setups that industrial robots need. Basically, the entire factory usually ends up being designed around the industrial robots it uses.

As Dmitriy Slepov, co-founder of Tibbo Technology, put it: “Robots can’t (yet) run to the warehouse and cart back a bunch of parts. Your robot is like a master craftsman sitting in the middle of a studio. Everything must be brought to it. For small parts, such as screws, you will need to install “screw presenters” — machines that “offer” screws in the right orientation. Larger parts will need to come on conveyor belts or some other means of transportation”.

Robots often need to be retrofitted with additional sensing systems, and must also have adequate guardrails set up around them so as to not endanger any humans. That’s a lot of steps involved in achieving factory automation, and a lot of expertise is required to accomplish this. That’s why there’s a market that exists just for configuring industrial robots to factories. It’s an industry called system integration, with big players like CHL Systems which specializes in manufacturing systems for goods and FANUC North America which specializes in designing factory floors for car manufacturing.

After all that, you’re still left with the hard truth that none of these robots can think for themselves. All of their actions and abilities will need to be hard coded into them. This means that all the work required to set up an automated manufacturing process has to be thrown out the window in the event that a change to any part of the process is needed. An entirely new system will need to be designed when this happens. Robots’ inability to switch between tasks easily makes massive investments in automated systems like these quite risky.

Unlike robots, humans can switch tasks pretty easily and adapt to new constraints and environments almost instantaneously. In a dynamic factory setting, this is the reason that human labor is currently still much more effective than robot labor – it is more easily and more cheaply reconfigurable. One way to take advantage of human nimbleness and robotic precision and power is through collaborative control, in other words, using robots that are partly operated by humans. Unsurprisingly, collaborative robots are the most commonly ordered robots in industrial settings.

Autonomous Mobile Robots

As exciting as industrial robots are, if you’ve ever seen Boston Dynamics’ robot dog, Spot, climb a flight of stairs, or watched their bipedal robot, Atlas, do parkour, you know that this is where modern robotics gets entertaining. The holy grail of robotics research is to get robots to move and think on their own.

Robots like Spot and Atlas can move on their own. In fact, these specimens have gotten very good at basic locomotion and balance whether on four legs or on two. Yet though they’ve been designed to traverse varied kinds of terrain and perform many sorts of complex movements, they still struggle with navigating in unfamiliar environments.

Another major branch of autonomous robotics is self-driving cars. This technology has shown tremendous progress over the past few years, particularly because of the number of cars on the roads capable of recording driving behavior, which is used to teach autonomous models the elements of good driving. However, determining what ‘good driving’ really means remains an unresolved challenge. For instance, is an autonomous vehicle that’s as good as a human driver enough, or would we want an autonomous vehicle that is substantially better than a human? From this perspective, we’re still likely a ways away from having fully autonomous self-driving cars on our roads. Even other types of seemingly autonomous robots, like Mars rovers or even cute sidewalk delivery bots, are, for the most part, still human-operated.

A Brief History of Robotics

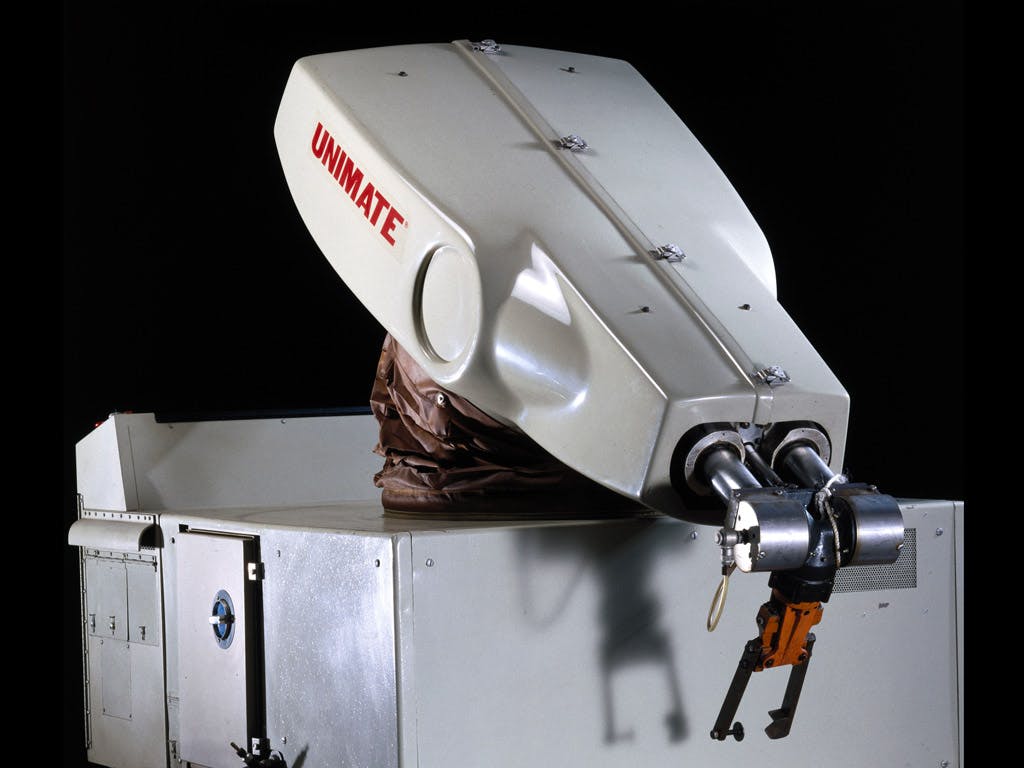

The history of robotics is deeply rooted in the history of industrialization. The Unimate, for instance, was the first ever industrial robot. It was developed by George Devol and Joseph Engelberger specifically for General Motors in the fifties, and within the next couple of years was featured on assembly line floors, doing tasks like spot welding or handling hot metal parts in car manufacturing.

The large industry budgets and iterative cycles from manufacturing applications gave the field of robotics a terrific canvas on which it could apply many of its theoretical ideas.

Source: IEEE

Many of these ideas came from a branch of mathematics developed in the thirties called control theory. Control theory was concerned with mathematically describing the feedback mechanisms and optimization techniques which contributed to equilibrated systems. The ideas of control theory were instrumental in designing the mechanisms by which robots incorporate information from sensors to maintain stability and adapt to changes in environment.
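A feedback loop of the kind control theory describes can be sketched in a few lines. The example below is a bare proportional controller acting on an idealized system whose value changes at exactly the commanded rate. The function name, the gain `kp`, and the time step `dt` are illustrative assumptions, and real robot controllers are considerably more elaborate.

```python
from typing import List


def simulate_p_controller(target: float, start: float, kp: float,
                          dt: float, steps: int) -> List[float]:
    """Drive a value toward `target` using proportional feedback.

    Assumes an idealized plant: the value changes at exactly the
    commanded rate, with no delay, noise, or inertia.
    """
    value = start
    history = [value]
    for _ in range(steps):
        error = target - value   # compare the sensed value with the setpoint
        command = kp * error     # a larger error produces a larger correction
        value += command * dt    # the plant responds to the command
        history.append(value)
    return history


# Hypothetical numbers: move a joint from 0.0 to 1.0 radians.
trajectory = simulate_p_controller(target=1.0, start=0.0, kp=2.0, dt=0.1, steps=50)
```

With these settings the remaining error shrinks by a constant factor of `1 - kp * dt = 0.8` on every step, so the value settles at the target without any hand-scripted trajectory. That self-correcting behavior is what lets a robot hold steady when its sensors report a disturbance.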

The development of better physical primitives, like electric sensors and actuators, meant that this information could be communicated faster and more efficiently, in contrast to older and more rudimentary robotic systems, which relied on hydraulic or pneumatic actuation. By the 1980s, robots had already become commonplace in factories, performing jobs like assembly, welding, and inspection, but it was not until rather recently that the proliferation of robots really took off.

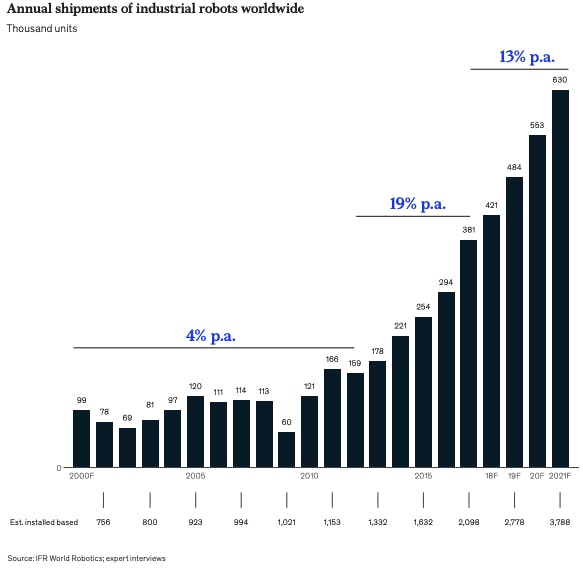

The graph of annual shipments of robotics hardware paints a picture of an industry that was seeing rather sluggish growth until just about a decade ago, when global labor prices began to increase while the cost of manufacturing electronics kept falling. In 2019 roughly $40 billion was spent globally on purchasing and installing industrial robotics equipment. All signs point to this only continuing to grow.

Source: McKinsey

The Future of Embodied Computation

The big outstanding challenge for robotics is teaching machines how to make independent decisions about physical movement. To date, most artificially intelligent systems have been trained entirely on text. As a result, those systems tend to be ill-prepared to deal with the physical inputs of the real world.

For instance, though large language models like GPT-3 are very good at writing cogent poems and essays, it is clear that they don’t know how the things they write about translate to the physical world. To demonstrate these shortcomings, two researchers asked GPT-3 a series of questions testing its physical reasoning abilities:

“When we asked GPT-3, an extremely powerful and popular artificial intelligence language system, whether you’d be more likely to use a paper map or a stone to fan life into coals for a barbecue, it preferred the stone. To smooth your wrinkled skirt, would you grab a warm thermos or a hairpin? GPT-3 suggested the hairpin. And if you need to cover your hair for work in a fast-food restaurant, which would work better, a paper sandwich wrapper or a hamburger bun? GPT-3 went for the bun.”

A number of roboticists believe the reason for this is that embodiedness is a precondition for developing general intelligence. For instance, Boyuan Chen, a robotics professor at Duke University, has said “I believe that intelligence can’t be born without having the perspective of physical embodiments.”

However, there’s still a powerful camp that believes large language models haven’t yet reached their full potential. Though GPT-3 failed at spatial reasoning, GPT-4 answered all the same questions correctly. It knew that paper was better at fanning coals than a stone was and that a sandwich wrapper works better as a hat than a hamburger bun. Notably, GPT-4 was trained on a combination of text and images, which suggests that language models could still get a lot better if only given a little more context about the text they’re manipulating. These two perspectives outline the two main approaches to teaching robots how to behave in unstructured environments: hierarchical learning and language-based learning.

Hierarchical learning builds off of the intuition that any complex task, like washing the dishes, is composed of a series of simpler tasks organized in a hierarchical order. Washing the dishes involves picking up a dish, scrubbing it with soap, rinsing it with water and then drying it in precisely that order. Each of those sub-steps is itself composed of smaller tasks in turn. Picking up a dish, for example, might involve identifying the dish, moving toward the dish, and using the appropriate arm movements to pick it up effectively without dropping it.
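The dish-washing decomposition above maps naturally onto a tree. The sketch below represents it as nested tasks and walks the tree depth-first to recover the ordered list of primitive steps. The `Task` class and `flatten` function are hypothetical names used only for illustration, and the leaf "grasp the dish" stands in for the arm movements described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    """A task that is either primitive (no subtasks) or composed of subtasks."""
    name: str
    subtasks: List["Task"] = field(default_factory=list)


def flatten(task: Task) -> List[str]:
    """Return the primitive steps in execution order (depth-first, left to right)."""
    if not task.subtasks:
        return [task.name]
    steps: List[str] = []
    for sub in task.subtasks:
        steps.extend(flatten(sub))
    return steps


wash_dishes = Task("wash the dishes", [
    Task("pick up a dish", [
        Task("identify the dish"),
        Task("move toward the dish"),
        Task("grasp the dish"),
    ]),
    Task("scrub it with soap"),
    Task("rinse it with water"),
    Task("dry it"),
])

plan = flatten(wash_dishes)
```

Here `plan` begins with the three sub-steps of picking up a dish and then continues with scrubbing, rinsing, and drying. The hard part, which this sketch leaves out entirely, is learning how to execute each leaf reliably.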

Stefan Schaal at the University of Southern California has developed one of the best-known frameworks for teaching robots motor skills through hierarchical learning. Known as the dynamic movement primitives approach, it amounts to showing robots a number of primitive movements and then using reinforcement techniques to teach them to combine those movements to achieve more complex goals.
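For a rough sense of what a single movement primitive looks like in code, the sketch below rolls out a one-dimensional primitive in the form commonly given in the dynamic movement primitives literature: a spring-damper system pulled toward a goal, plus a forcing term whose weights shape the path. It is a simplified illustration under stated assumptions, not the authors' implementation. The parameter values, the fixed basis-function width, and the simple Euler integration are all choices made here for brevity, and the code only replays a primitive from given weights rather than learning them.

```python
import math
from typing import List


def rollout_dmp(y0: float, goal: float, weights: List[float],
                tau: float = 1.0, dt: float = 0.001,
                alpha_z: float = 25.0, alpha_x: float = 3.0) -> List[float]:
    """Integrate a 1-D movement primitive from y0 toward goal.

    With all weights at zero this reduces to a critically damped
    spring that settles at the goal; non-zero weights bend the path.
    """
    beta_z = alpha_z / 4.0  # critical damping
    n = len(weights)
    # Basis centres spread along the phase variable x, which decays from 1.
    centers = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    width = float(n * n)  # assumed constant width for every basis function

    y, z, x = y0, 0.0, 1.0
    path = [y]
    for _ in range(round(tau / dt)):
        psi = [math.exp(-width * (x - c) ** 2) for c in centers]
        total = sum(psi)
        forcing = 0.0
        if total > 0.0:
            weighted = sum(p * w for p, w in zip(psi, weights))
            forcing = weighted / total * x * (goal - y0)
        dz = (alpha_z * (beta_z * (goal - y) - z) + forcing) / tau
        dy = z / tau
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        path.append(y)
    return path


# Hypothetical example: a straight reach from 0.0 to 1.0 with no shaping.
reach = rollout_dmp(y0=0.0, goal=1.0, weights=[0.0] * 5)
```

Because the goal is a parameter rather than something baked into the trajectory, the same primitive can be replayed toward a different target, which is part of what makes primitives attractive as building blocks.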

One of the biggest problems with this approach, however, is that it’s inefficient. Artificially intelligent systems require lots of examples in order to learn, which means large data sets are needed to train them. This means teaching robots using movement primitives involves a lot of time instructing them on how to perform basic movements and repeating those over and over. Given that each robot has a unique physical structure, there is limited transferability across robots with other form factors. For them, the entire learning process would need to be repeated.

The time demands of this learning approach, so far, have resulted in the ultimate failure of many of the commercial programs that adopted it. OpenAI, for example, once had a robotics team that succeeded in training a robot hand to solve a Rubik’s cube. However, given the limited transferability of this knowledge, and the more rapid development of large language models, OpenAI shuttered its robotics team in 2021.

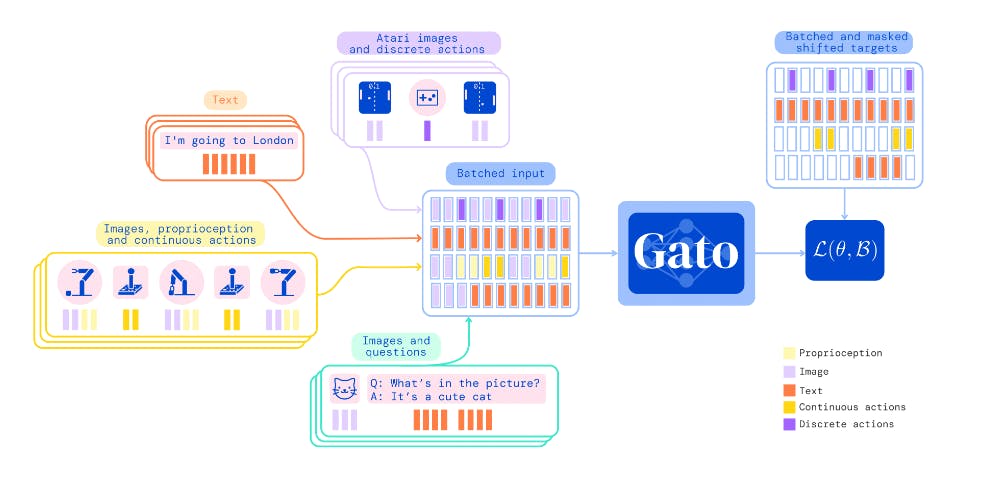

Language-based learning, on the other hand, begins with the insight that complex tasks could just as easily be broken down into simpler instructions by a sophisticated language model. In fact, breaking down the task of washing the dishes is an assignment that even GPT-3 could do very well. Then, training robots on a combination of text, images, and movement primitives would ideally help the robots learn how to combine the primitives together more efficiently to accomplish tasks. Eventually, these systems learn to use discrete or continuous actions as tokens to create meaningful sequences, just as GPT-3 uses words as tokens to create meaningful sentences.
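The idea of treating actions as tokens can be illustrated with a simple discretization. The sketch below maps a continuous command, such as a normalized joint velocity, onto one of a fixed number of integer bins and back again. Uniform binning, the range of -1 to 1, and the 256-bin vocabulary are assumptions chosen for illustration, not a description of how any particular model encodes actions.

```python
def action_to_token(value: float, low: float = -1.0, high: float = 1.0,
                    bins: int = 256) -> int:
    """Map a continuous action onto an integer token in [0, bins - 1]."""
    clipped = min(max(value, low), high)         # keep the value inside the range
    fraction = (clipped - low) / (high - low)    # rescale to [0, 1]
    return min(int(fraction * bins), bins - 1)   # the top edge falls in the last bin


def token_to_action(token: int, low: float = -1.0, high: float = 1.0,
                    bins: int = 256) -> float:
    """Map a token back to the centre of the bin it represents."""
    bin_width = (high - low) / bins
    return low + (token + 0.5) * bin_width


# Hypothetical joint commands for a three-joint arm, encoded as a token sequence.
joint_commands = [0.0, -1.0, 0.3]
tokens = [action_to_token(c) for c in joint_commands]
```

Once actions are integers drawn from a fixed vocabulary, a sequence model can predict the next action token the same way it predicts the next word. The cost is a small rounding error, at most half a bin width, when a token is decoded back into a motor command.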

This approach to training robots is the newest and has taken off in recent years. DeepMind’s Gato is just one example of a successful implementation. This system, when attached to a number of different output devices, like a robotic arm or a video game controller, can decide whether to output joint movements, button presses, or other actions based on the context of the prompt. As a result, the same system can stack blocks or play Atari just as easily as it can recognize images and respond to queries.

Source: DeepMind

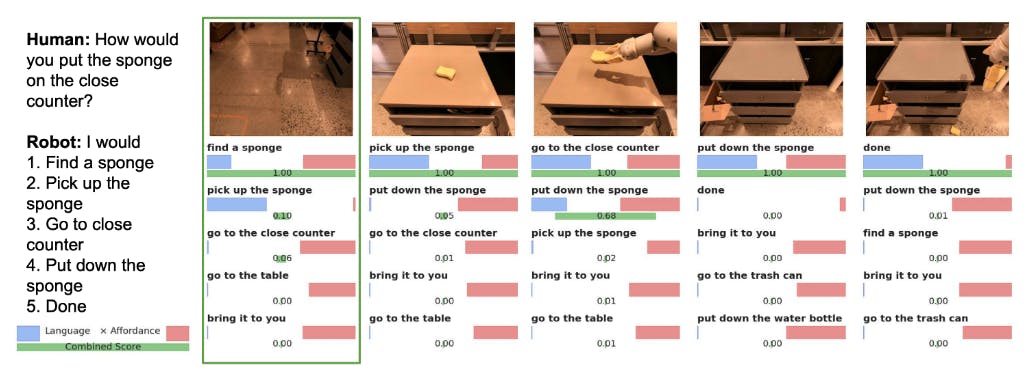

A research team at Google, in collaboration with Everyday Robots, developed a similar system called SayCan. The SayCan system works by grounding actions expressed in language in physical pre-trained behaviors. When the language model is combined with a robot, the robot acts as the language model’s hands and eyes. Whereas physical pre-training teaches the robot how to perform basic low-level tasks, insights from the attached language model teach the system higher-level knowledge about how to combine the low-level tasks into more complex functions.
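One way to picture this grounding is as a product of two scores: how useful the language model thinks a skill is for the instruction, and how likely the robot is to succeed at that skill from its current state. The sketch below is a simplified illustration of that idea. The skill names and every number in it are hypothetical, and the real system obtains these scores from a language model and learned value functions rather than from hand-written dictionaries.

```python
from typing import Dict


def choose_skill(language_scores: Dict[str, float],
                 affordance_scores: Dict[str, float]) -> str:
    """Pick the skill with the highest combined score.

    language_scores:   how relevant each skill is to the instruction ("say").
    affordance_scores: how feasible each skill is right now ("can").
    Skills missing from affordance_scores are treated as infeasible.
    """
    combined = {
        skill: language_scores[skill] * affordance_scores.get(skill, 0.0)
        for skill in language_scores
    }
    return max(combined, key=combined.get)


# Hypothetical scores for the instruction "I spilled my drink, can you help?"
language_scores = {
    "find a sponge": 0.5,
    "pick up the sponge": 0.4,
    "go to the table": 0.1,
}
# No sponge is in view yet, so picking one up is unlikely to succeed.
affordance_scores = {
    "find a sponge": 0.9,
    "pick up the sponge": 0.1,
    "go to the table": 0.8,
}

next_skill = choose_skill(language_scores, affordance_scores)
```

With these made-up numbers the robot chooses "find a sponge": the language model rates picking up the sponge almost as highly, but the low feasibility score rules it out until a sponge is actually within reach.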

Source: SayCan

Generalist Agents

Given the historic shortcomings of robots, roboticists think it’s premature to assign anthropomorphic features to them. Joe Jones, the inventor of the Roomba, for instance, has said that “when designers festoon their robots with anthropomorphic features, they are making a promise no robot can keep.” Though this has been the prevailing view among certain roboticists for years, these days we’re increasingly recognizing that robots do have some humanoid characteristics. In particular, computers can now talk just as we do.

Outside of the recent dash to retrofit existing robots with human-language communication, as seen with products like Moxie, a robot that uses GPT-3 to teach children, or Engineered Arts’ Ameca, which can speak and even do facial gestures, there seems to have been a rebirth in interest in building human-resembling robots.

For one thing, it looks like OpenAI is re-entering the robotics game given its recent investment in 1X Technologies, a company developing a new bipedal robot called Neo, which should be released in summer 2023. Figure is yet another company currently working on building a “feature-complete electromechanical humanoid” with the ultimate goal of “integrat[ing] humanoids into the labor force.” Figure’s prototype of the “first commercially viable general purpose humanoid robot” currently stands at 5 feet 6 inches and weighs 132 lbs. Figure is hoping it will be able to lift 44 lbs, walk at a speed of roughly 2.7 mph, and operate for up to five hours on a single charge.

Source: 1X

Tesla is yet another company taking a stab at building humanoid robots. On its investor day in 2023, Tesla showed its human-resembling robot, Optimus, walking for the first time. The company hopes that Optimus, too, might one day enter the labor force. At one point, Elon Musk even said he thinks that Tesla’s robot business might one day surpass its car business. Given that Hyundai Motors is the owner of Boston Dynamics, it seems vehicle manufacturers are starting to become big robotics companies in disguise.

Given recent advances in language-based learning, robotics is once again rapidly evolving. As technology advances and costs decline, we can expect to see more types of robots becoming economically viable. With the constant expansion of the toolbox for robot designers, the possibilities for innovation are limitless.

The challenge now is to create a robot equivalent to human labor in cost and ability, either through the development of an AGI that can figure out how to make robots work or by training robots physically to achieve AGI. As we continue to push the boundaries of what robots can do, we must also consider the ethical implications of creating machines that could potentially replace human workers. Ultimately, the future of robotics holds great promise, but it is up to us to navigate the challenges ahead with foresight and responsibility.

Disclosure: Nothing presented within this article is intended to constitute legal, business, investment or tax advice, and under no circumstances should any information provided herein be used or considered as an offer to sell or a solicitation of an offer to buy an interest in any investment fund managed by Contrary LLC (“Contrary”) nor does such information constitute an offer to provide investment advisory services. Information provided reflects Contrary’s views as of a time, whereby such views are subject to change at any point and Contrary shall not be obligated to provide notice of any change. Companies mentioned in this article may be a representative sample of portfolio companies in which Contrary has invested in which the author believes such companies fit the objective criteria stated in commentary, which do not reflect all investments made by Contrary. No assumptions should be made that investments listed above were or will be profitable. Due to various risks and uncertainties, actual events, results or the actual experience may differ materially from those reflected or contemplated in these statements. Nothing contained in this article may be relied upon as a guarantee or assurance as to the future success of any particular company. Past performance is not indicative of future results. A list of investments made by Contrary (excluding investments for which the issuer has not provided permission for Contrary to disclose publicly, Fund of Fund investments and investments in which total invested capital is no more than $50,000) is available at www.contrary.com/investments.

Certain information contained in here has been obtained from third-party sources, including from portfolio companies of funds managed by Contrary. While taken from sources believed to be reliable, Contrary has not independently verified such information and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation. Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Please see www.contrary.com/legal for additional important information.