Computers are among the most complex instruments humans have ever produced. Physically, they are merely miniaturized circuits that let electrons flow selectively through carefully designed pathways. Yet the effect of this process is remarkable: devices capable of encoding and executing our most sophisticated schemes and ideas.

It seems that the more mastery we develop over digital brains, the more insight we gain into the workings of our own biological ones. The striking, even uncanny, symmetry between digital devices and biological brains raises many questions. For instance, could the two talk to each other? The personal computing revolution was an early attempt to answer this, enabled by smaller chips and novel interaction designs. Inventions like Douglas Engelbart’s mouse and the graphical user interface (GUI), pioneered by Alan Kay and his colleagues at Xerox PARC, allowed us to communicate with computers more intuitively through abstracted mediums.

As time went on, computer interfaces grew physically closer to the user until nearly everyone had a computer in the palm of their hand. Today, we are getting closer still: through augmented reality, we can superimpose the graphical user interface onto our field of view.

However, we can go even further. We can eliminate abstracted interfaces entirely and create a direct link between brain and machine, translating cognitive impulse into machine output. This far more direct link is called a brain-computer interface.

This concept is no longer science fiction. Brain-computer interfaces are already here. As of 2023, around 50 people in the world had chips implanted in their brains, helping them restore lost faculties of vision, speech, and movement.

However, the full potential of merging our biological brains with digital ones is far greater. It could allow us to extend our faculties far beyond what we were biologically endowed with, from sensing in new ways to controlling a wide variety of things with our minds. Brain-computer interfaces show that, far from being over, the computing revolution has just begun.

Brain-Computer Interfaces: From Science Fiction to Reality

The human brain is one of the most mystifying objects known to modern science. The average human brain has close to 86 billion neurons, a number on the same order of magnitude as the stars in the Milky Way. There are also nearly a quadrillion synapses, or connections, between neurons that allow messages to pass from one brain cell to the next. The brain’s kaleidoscopic complexity allows us to do everything: see, smell, walk, imagine, dream, and invent. What’s more, it needs only about 20 watts to function. For comparison, a hair dryer uses 1,500 watts, roughly 75 times as much.

We are still far from understanding exactly how the brain works. We don’t yet understand its complete structure, and we haven’t produced a full map of its neuronal pathways and synaptic connections. We haven’t even identified every type of neuron; researchers think there are hundreds.

We have, however, learned how to record and mimic patterns of neural signaling in the brain. With these approximations alone, we’ve been able to perform wonders. By observing how the brain communicates with itself, researchers have discovered how to decode messages within the brain and communicate those messages to external technological systems. Already, we have succeeded at decoding the neural signaling patterns that can control the movement of a cursor on a screen, enabling us to do so with merely our thoughts. Elsewhere, reading from the brain has allowed people who have suffered from stroke or paralysis to operate prosthetic limbs with their minds.
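To make the idea of decoding concrete, here is a minimal sketch of one classic approach to cursor control, the “population vector” decoder. It is an illustration only, not the method used by any specific system mentioned here. It assumes idealized, noise-free “cosine tuning,” in which each neuron fires fastest when the intended movement points in its preferred direction; the neuron count, baseline rate, and modulation depth are hypothetical values.

```python
import math

def simulate_rates(theta, preferred, baseline=10.0, depth=8.0):
    """Hypothetical cosine tuning: each neuron's firing rate (spikes/s) peaks
    when the intended direction theta matches its preferred direction."""
    return [baseline + depth * math.cos(theta - p) for p in preferred]

def decode_direction(rates, preferred, baseline=10.0, depth=8.0):
    """Population vector: add up each neuron's preferred-direction unit vector,
    weighted by how far its rate sits above (or below) baseline."""
    x = y = 0.0
    for rate, p in zip(rates, preferred):
        weight = (rate - baseline) / depth
        x += weight * math.cos(p)
        y += weight * math.sin(p)
    return math.atan2(y, x)  # decoded movement direction in radians

# Eight hypothetical neurons with evenly spaced preferred directions.
preferred = [2 * math.pi * k / 8 for k in range(8)]

intended = math.radians(60)            # the direction the user "thinks"
rates = simulate_rates(intended, preferred)
decoded = decode_direction(rates, preferred)
print(round(math.degrees(decoded), 1))
```

Because the preferred directions are evenly spaced and the simulated rates are noise-free, the weighted sum points exactly along the intended direction, so the example prints 60.0. Real recordings are noisy and tuning drifts over time, which is one reason such decoders need to be calibrated for each person.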

Historically, such systems have been slow to translate intention into machine output, but recent years have brought dramatic improvements. In a study published in August 2023, a woman who had lost the ability to speak after a stroke had a brain-computer interface surgically placed on the surface of her cortex, directly over the region that once controlled her facial expressions and larynx.

The interface consisted of roughly 250 electrodes that recorded brain activity in that region. Researchers then used artificial intelligence to learn the relationships between the words she was trying to say and the activity in her brain. Once the system was calibrated, a computer could decode her attempted speech at a rate of 78 words per minute, the fastest rate reported for such a device at the time. Because natural conversation runs at roughly 150 words per minute, this put her at about half conversational speed, a significant step forward.

What we’re seeing with brain-computer interfaces is an exciting start, but it’s only the beginning. Today’s implanted BCIs require a great deal of supervision. Each person’s brain patterns differ slightly, so each patient requires custom calibration. Furthermore, most implanted BCIs to date have relied on a wired physical connection between the implant and the machine interpreting its signals. In other words, BCI technology in its current experimental state is not yet scalable.

However, a number of companies are working to make this technology more generalizable, and therefore more accessible to the patients who could benefit. On the path to generalizability, one of the biggest concerns is whether an implant can keep functioning inside a patient’s brain over the long term. Hardware degrades over time, and only trials can settle this question.

Early in 2024, Neuralink, Elon Musk’s BCI company, began implanting patients in its first human trial, designed to answer precisely this question. The FDA-approved trial will monitor the volunteers over a period of at least five years. The implants are intended to let patients control computers via brain signals. Neuralink’s implant is also fully wireless, a milestone in BCI history.

Though many details about the trial are not publicly available, on February 19th, 2024, Elon Musk said that the first implantation had been successful and that the patient could move a cursor on a screen using the implant.

The extreme level of care and attention required to perform even one human trial shows how far this technology still has to go before it becomes a realistic option for everyone who could benefit from its ability to restore function. Still, the positive news signals that we are well on the way to a device that may one day work for anyone, not only restoring lost functions but perhaps enhancing, and even surpassing, the biological faculties we’ve been endowed with.

A Brief History of the Brain

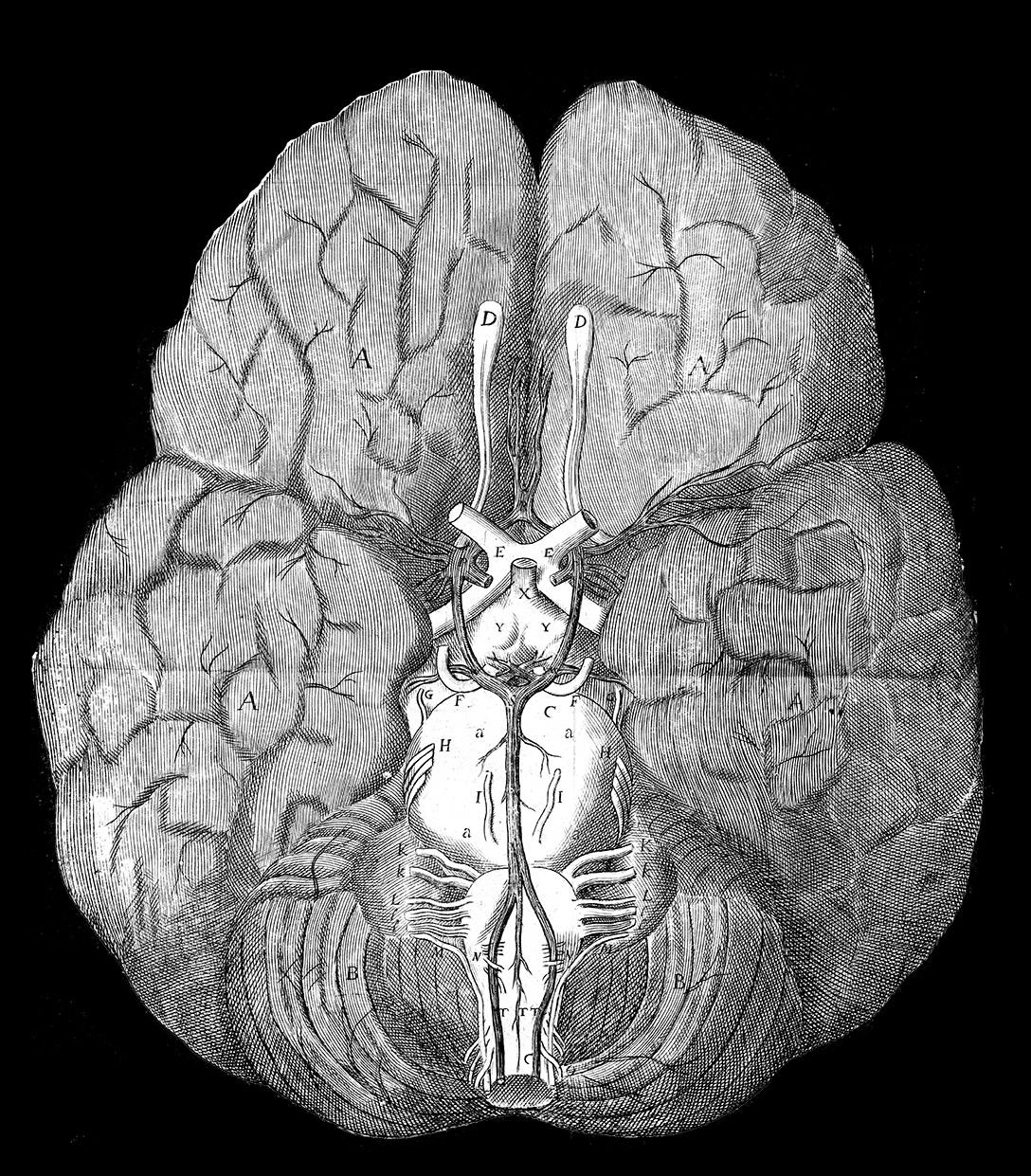

Neuroscience, like computing, is one of the youngest scientific fields. It was only a few hundred years ago that we identified the brain as the center of cognition; before that, questions about the home of the soul were mostly left to clerics. Carl Zimmer’s book “Soul Made Flesh” details how, at the dawn of the Enlightenment, a handful of men, Thomas Willis chief among them, were the first to extract an intact human brain from the corpse of a nobleman.

The meticulous study of this strange organ began then and there, ushering in what Zimmer calls the “Neurocentric Age.” From then on, physicians paid close attention to the brain’s folds. Through the seventeenth and eighteenth centuries, many suspected that the distinct sections separated by ridges and grooves corresponded to distinct functions.

Source: History of Science, Anatomy of the Brain by Thomas Willis

Eventually, in the mid-nineteenth century, a physician named Paul Broca confirmed this hunch. Two patients were brought to his attention, both of whom had lost the ability to speak. The first, a 51-year-old man, could utter only a single syllable, “tan,” despite clearly retaining his cognitive abilities. The second, an 84-year-old being treated for dementia, could likewise say only a handful of simple words, including “yes” and “no.” After the two men died, Broca performed autopsies and found lesions in the same region of the left frontal lobe. He concluded that this region must be the center of the brain responsible for producing speech.

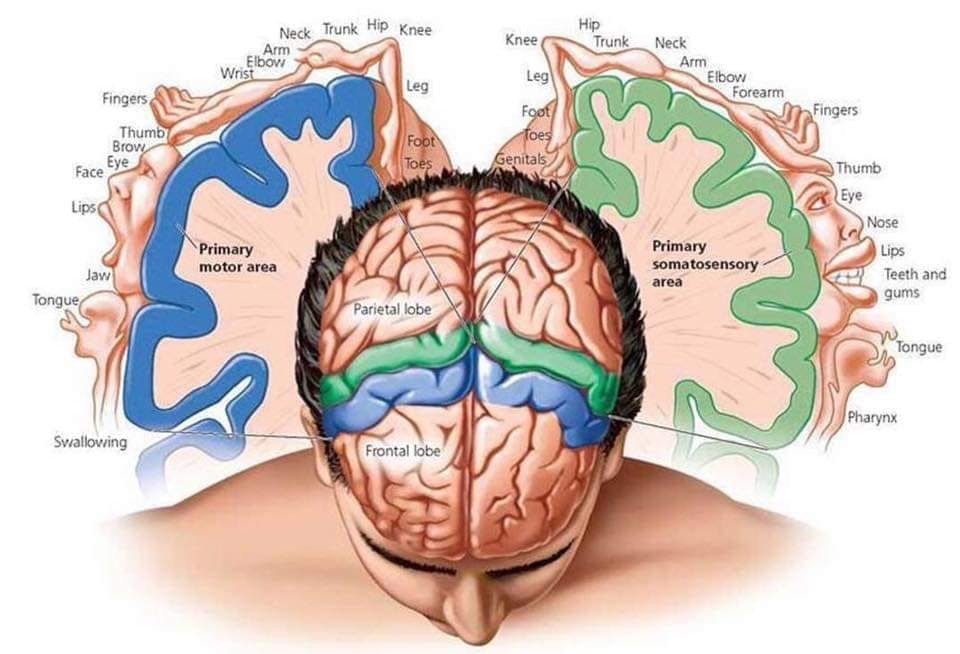

It would take roughly another seventy years before our sensory and motor functions could be mapped more completely onto distinct regions of the brain. The man who accomplished this was Wilder Penfield, a Canadian neurosurgeon who developed a method in the 1930s called the “Montreal Procedure,” in which a portion of the patient’s skull was removed while the patient remained awake.

Penfield’s patients had epilepsy, and he hypothesized that removing the damaged regions of their brains could cure their seizures. In many cases the surgery did work, and though it sounds grotesque, it was relatively painless because the brain itself has no pain receptors. Because his patients stayed conscious while he had direct access to their brains, Penfield could probe various regions with a small electrode and observe the effects. After numerous such surgeries, in 1937 Penfield published a report showing how the surface of the brain, the cortex, maps onto nearly every motor and sensory function we are capable of, a map often drawn as a distorted human figure known as the cortical homunculus.

Source: Oren Gottfried

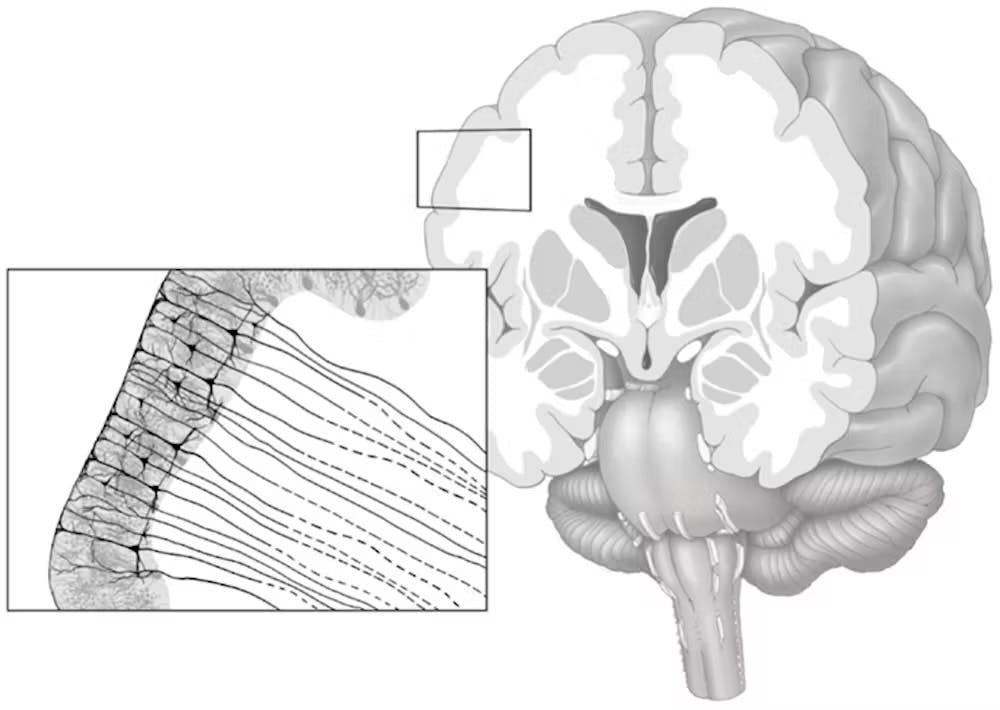

Today, the cortex is often divided into seven lobes: the frontal, central, parietal, occipital, insular, temporal, and limbic (many textbooks count only four or five). Each lobe is composed of groups of neurons with specialized functions, though these functions overlap a great deal, which makes untangling the workings of the brain rather difficult.

The term “lobe” might imply that an entire chunk of the brain carries out these functions when, in fact, the cortex is merely a layer a few millimeters thick covering the outer surface of the brain. Its deep folds greatly increase its surface area, allowing it to harbor an enormous number of neurons.

Source: The Conversation

Reading & Writing to the Brain

It’s rather lucky for us that so much of this activity happens right at the surface of the brain, rather than in inner recesses that are extremely difficult to reach and whose functions we still don’t wholly understand.

Luigi Galvani, who discovered biological electricity in the late eighteenth century when he made the leg of a dissected frog twitch by applying electricity, produced a breakthrough in our understanding of how impulses travel through the body. More than 130 years later, in 1924, Hans Berger recorded the first human electroencephalogram (EEG), placing electrodes on the surface of the head to measure electrical impulses in the brain.

Source: Proto Mag

Roughly fifty years later, Jacques Vidal at UCLA began studying how EEG recordings could be used to manipulate external objects or prosthetics. He coined the term “brain-computer interface” and launched a whole field of applied neuroscience.

EEG is still used today because it is the least invasive way of reading the brain’s activity. In May 2023, for example, a gaming enthusiast turned an EEG device into a game controller, allowing her to play Elden Ring with only her thoughts. In general, however, EEG gives only a coarse reading of brain activity. The signals must travel through the skull before reaching the electrodes, which blurs them, and the technique has essentially no ability to distinguish which neurons are actually firing. The best it can do is record activity across broad regions of the brain comprising millions or billions of neurons.

Source: IEEE Spectrum

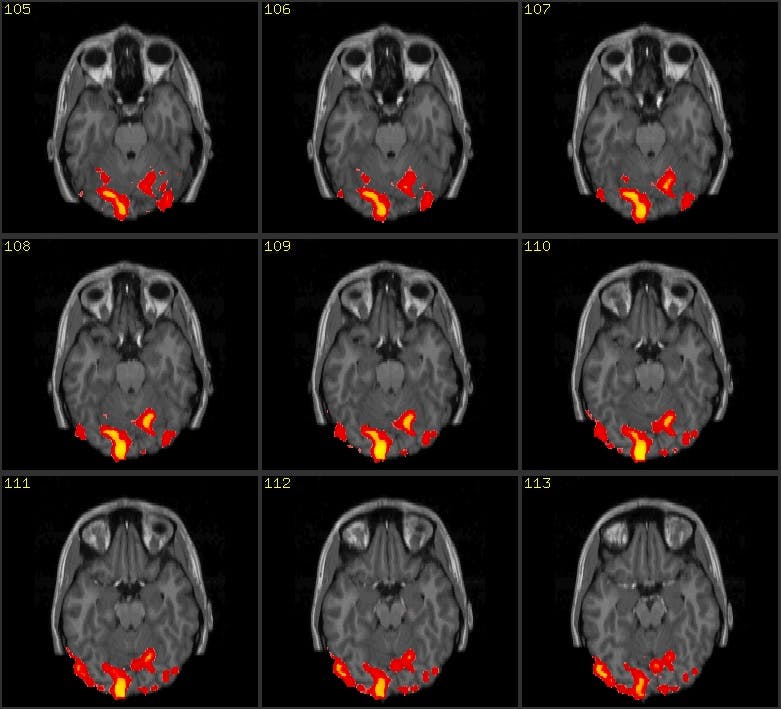

A more spatially precise way to observe brain activity is functional magnetic resonance imaging (fMRI). Rather than recording electrical signals, fMRI registers changes in blood flow within the brain. These changes track where activity is happening when the brain performs various tasks, because regions that expend energy sending signals need a larger influx of blood. The problem is that blood flow responds over seconds, far more slowly than neurons fire, which makes fMRI more valuable as an observational tool than as a real-time interface. That said, it achieves finer spatial resolution than EEG, showing activity within groups of only 40,000 neurons or so.

Source: California State University

Of course, both EEG and fMRI give us the ability to read data from the brain’s activity, but neither allows us to write data to the brain. Doing so with precision generally requires invasive technologies.

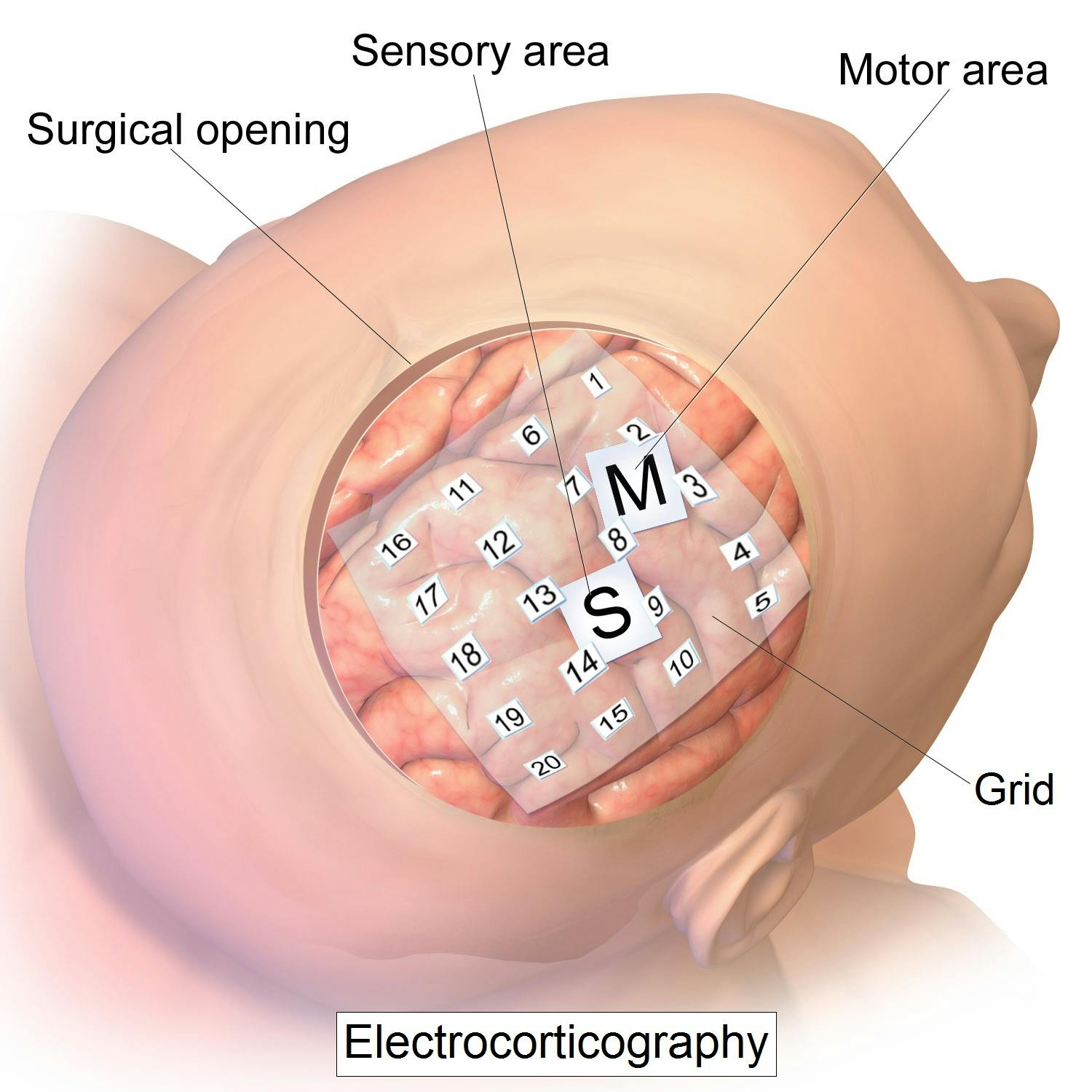

An electrocorticogram, or ECoG, is similar to an EEG, but rather than being placed on the scalp, the electrodes are placed directly on the surface of the cortex. Without the skull in the way, the signal is much clearer, though ECoG still cannot resolve the activity of individual neurons.

Source: Blausen Staff (2014)

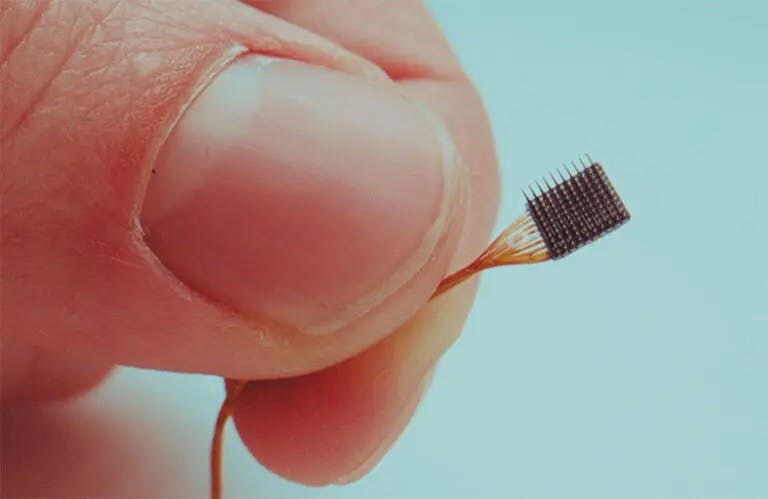

In an ideal world, we could read what every neuron in the brain is doing to get the most accurate understanding of how the brain signals its intentions. Neurons, however, are incredibly small. The main cell body, or “soma,” of a neuron is about 10 microns wide, and there are over 20 billion of them packed into the cortex.

To interpret neuronal signaling more precisely, we need to go into the brain. This is done with an intracortical microelectrode array: tiny needles, each with an electrode tip only a few microns across, mounted on a chip-like implant and inserted one to two millimeters deep into the cortex. These electrodes pick up much more nuanced signals from the neurons immediately surrounding them, giving a detailed picture of activity in that region.

Source: Medical Design & Outsourcing

Human trials with microelectrode arrays from the early 2000s onward have shown that this technology has immense potential. One of the first such systems implanted in a human was BrainGate, a microelectrode array that allowed Matt Nagle, who was quadriplegic, to play Pong with his mind. Other trials tested whether microelectrode arrays could stimulate neurons in the cortex to produce a sense of touch. This meant that patients could not only control prosthetics with their minds but also receive sensory feedback from the prosthetic through the same implant. Patients who had lost limbs or been paralyzed reported regaining a sense of touch.

Of course, the range of functions any brain-computer interface can translate into machine output depends on the range of brain activity it can read. If the interface can detect only a limited range of signals, it can produce only a limited number of outputs. In practice, this might mean the difference between a prosthetic limb that can be raised and lowered and one with full, dexterous control of its digits. The former is already a reality; the latter has not yet been demonstrated, primarily because of the limited form factor of the microelectrode array.

As Penfield’s homunculus shows, the brain devotes an enormous share of its resources to manual dexterity, so a small electrode array won’t suffice to read from and write to all of these vast regions. In a 2017 essay on Neuralink, blogger Tim Urban wrote that the main bottleneck for producing more powerful BCIs is bandwidth: “We need higher bandwidth if this is gonna become a big thing. Way higher bandwidth.”

Urban interviewed the Neuralink team and found that they thought an interface capable of engaging with the brain holistically would need something like “one million simultaneously recorded neurons.” When Urban wrote the piece, the most sensitive implant had about 500 electrodes. Seven years later, in 2024, Neuralink’s human trial implant had roughly doubled that, with an array of 1,024 electrodes.
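The size of the gap Urban describes can be made concrete with a little arithmetic. The sketch below is purely illustrative and rests on two hypothetical assumptions that do not come from the article: that electrode counts keep growing at the same exponential pace as between 2017 (about 500) and 2024 (1,024), and that one electrode corresponds to one recorded neuron, which real arrays do not guarantee.

```python
import math

def years_to_target(start, end, span_years, target):
    """Naive extrapolation: assume growth from `start` to `end` over `span_years`
    continues at the same exponential rate, and estimate how far `target` is.
    Returns (gap factor, doublings still needed, years still needed)."""
    years_per_doubling = span_years / math.log2(end / start)
    gap = target / end
    doublings = math.log2(gap)
    return gap, doublings, doublings * years_per_doubling

# Figures quoted in the text; the constant-growth premise is hypothetical.
gap, doublings, years = years_to_target(
    start=500,            # approximate electrode count in 2017
    end=1024,             # Neuralink's 2024 trial implant
    span_years=2024 - 2017,
    target=1_000_000,     # "one million simultaneously recorded neurons"
)
print(f"gap: {gap:.0f}x, doublings: {doublings:.1f}, years: {years:.0f}")
```

Under those assumptions, the target is roughly 977 times the 2024 electrode count, about ten more doublings, or on the order of 67 years at the 2017 to 2024 pace. That is one way to see why researchers are exploring entirely new form factors rather than incremental gains.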

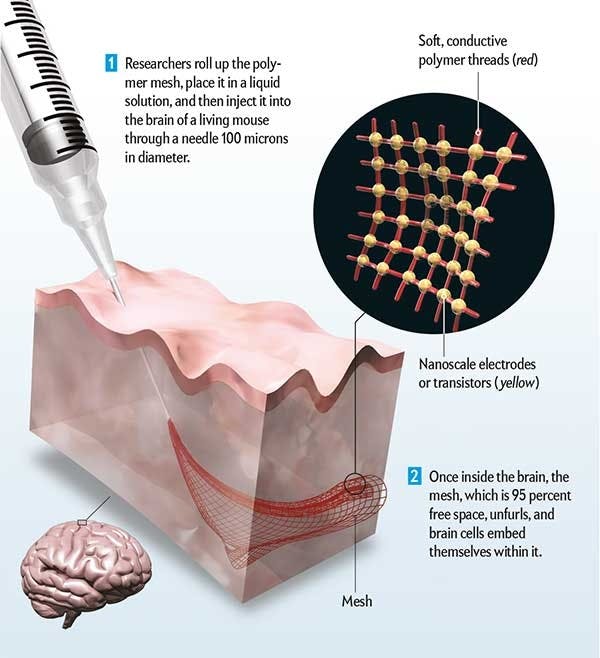

Although individual arrays placed over strategic regions of the cortex have proved valuable in restoring targeted faculties, communicating with a larger surface area of the brain will require another form factor. There is already a great deal of fascinating research into what this could one day look like. DARPA has funded an effort to develop a miniature device with up to a million electrodes on its surface, while others have proposed a form of “neural dust”: small silicon sensors distributed through the cortex that would send signals to a receiving implant that collects their messages.

The Future of Brain-Computer Interfaces

Many challenges remain before brain-computer interfaces can become broadly commercially available, let alone before the bandwidth problem is solved. Neuralink is making strides even here, however. One of Elon Musk’s main goals is to perform implant insertion in a fully automated way. To this end, Neuralink has designed a surgical robot to insert its implants, with the aim of reducing the need for a neurosurgeon and making the technology more scalable.

In the more distant future, complicated, invasive surgery might not be needed at all. Given advances in materials science, flexible mesh arrays could perhaps be injected via the bloodstream and eventually find their way into the brain, ideally offering both greater bandwidth and easier insertion. In such a world, there would be almost no limit to what brain-computer interfaces could enable humans to do.

Source: Scientific American

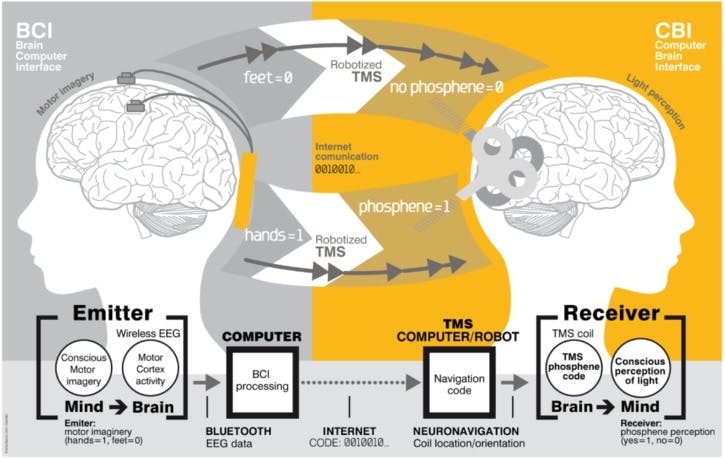

Interestingly, Hans Berger invented the EEG because he believed he had communicated telepathically with his sister after a traumatic accident: almost immediately afterward, she had sent him a telegram saying she was worried about him. Berger’s EEG didn’t prove the existence of telepathy, but it may well lead us to develop this ability in the long term. In fact, something resembling telepathy was demonstrated in 2014, when researchers transmitted simple information from one person’s brain to another’s, using EEG to read signals from the sender and transcranial magnetic stimulation to deliver them to the receiver.

Source: Human Brain / Cloud Interface

Tim Urban argues that sufficiently advanced BCIs could unlock an entirely new way of communicating. Today, we must externalize our thoughts by translating them into speech or text in order to transmit our ideas. A world where people’s brains can connect directly, however, could offer more fundamental ways to exchange them. Urban imagines that one day people could simply beam their thoughts to one another. Nuanced ideas wouldn’t need to pass through the filter of translation; they would arrive, fully formed, in the recipient’s mind.

And not just telepathy: something like telekinesis could become possible too. It’s not difficult to imagine a world of connected devices commanded and directed by thought alone. Developing this ability would require training, practice, and using the brain in new ways to control wirelessly connected appendages.

Thanks to a phenomenon known as neuroplasticity, the brain has a remarkable capacity to adapt to such uses: the neural pathways used to perform particular actions can change over time. These patterns are fundamentally dynamic, shifting with time and circumstance, which is also part of why everyone’s brain patterns differ slightly. Neuroplasticity suggests that new abilities, such as seeing in other wavelengths, hearing over much greater distances, and whatever else widespread brain-computer interfaces might enable, could in theory one day become a reality.

In 2014, a device called BrainPort demonstrated the power of human neuroplasticity. Patients who had lost their vision due to stroke received images from a camera as patterns of stimulation on an electrode array placed on their tongue, and over time they learned to see through their tongues.

Source: Lee et al., 2014a

The future of brain-computer interfaces holds the promise of unlocking unprecedented possibilities, ranging from enhanced communication to the development of new sensory capabilities and more. While there are still fascinating challenges to solve, the ongoing advancements in technology and our understanding of the brain suggest that we may be on the brink of a transformative era in human-computer interaction.

Disclosure: Nothing presented within this article is intended to constitute legal, business, investment or tax advice, and under no circumstances should any information provided herein be used or considered as an offer to sell or a solicitation of an offer to buy an interest in any investment fund managed by Contrary LLC (“Contrary”) nor does such information constitute an offer to provide investment advisory services. Information provided reflects Contrary’s views as of a time, whereby such views are subject to change at any point and Contrary shall not be obligated to provide notice of any change. Companies mentioned in this article may be a representative sample of portfolio companies in which Contrary has invested in which the author believes such companies fit the objective criteria stated in commentary, which do not reflect all investments made by Contrary. No assumptions should be made that investments listed above were or will be profitable. Due to various risks and uncertainties, actual events, results or the actual experience may differ materially from those reflected or contemplated in these statements. Nothing contained in this article may be relied upon as a guarantee or assurance as to the future success of any particular company. Past performance is not indicative of future results. A list of investments made by Contrary (excluding investments for which the issuer has not provided permission for Contrary to disclose publicly, Fund of Fund investments and investments in which total invested capital is no more than $50,000) is available at www.contrary.com/investments.

Certain information contained in here has been obtained from third-party sources, including from portfolio companies of funds managed by Contrary. While taken from sources believed to be reliable, Contrary has not independently verified such information and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation. Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Please see www.contrary.com/legal for additional important information.